Bots in Brief (02.2020)

Fake Flipper

Being such playful, charismatic, and just generally cool animals, it’s easy to understand why humans are so charmed by dolphins. However, their social behavior and remarkable intelligence are precisely the reason they aren’t really suited to life in captivity. If you’ve always wanted to get up close and personal with these wonderful animals but felt morally obligated to refrain from doing so, a special effects company in America may have come up with the perfect solution.

Edge Innovations are the animatronics and special effects company behind some of cinema’s oceanic favorites, including Free Willy, Flipper, and Deep Blue Sea. Following the halt in animal trade in China in the wake of the COVID-19 pandemic, a Chinese aquarium approached Edge Innovations with the idea of creating a robotic dolphin that could replace the animals used in hands-on demonstrations. Their hope was to preserve the educational benefits of such exhibits without the need for the capture and captivity of these self-aware animals.

It appears they succeeded. The robotic dolphins can swim (or run, as it were) for 10 hours without charging and are predicted to be able to survive in salty water with other fish for up to 10 years.

The resulting technology is intended for use as an educational tool to amaze and mesmerize visitors, but is also a demonstration of the technologies Edge Innovations believe could be applied to animatronic belugas, orcas, or even great whites — all of which are unsuitable for captivity.

By creating such alternatives to living animals that can play, perform, and put up with cramped living conditions in the same way that living captive dolphins do, it’s hoped the invention will speed up the process of phasing out live animal performances from theme parks like SeaWorld.

Weighing a hefty 270 kilograms (595 pounds), the dolphins have been designed with a realistic musculoskeletal framework beneath their artificial skin so that they can mimic the movements as well as the appearance of an adolescent bottlenose dolphin. The ensemble gives the illusion of something that is very much alive, but the robot (not yet fitted with cameras, sensors, or artificial intelligence) is actually just an elaborate puppet controlled by a nearby operator. This wizardry means the synthetic cetacean can respond to commands and interact with visitors in real time.

The creation is pretty remarkable considering it can offer a safe, ethical, and easy-to-maintain alternative to live animal performances, even if it does still require a human at the helm to tell it what to do.

Believe it or not, this is not a real dolphin!

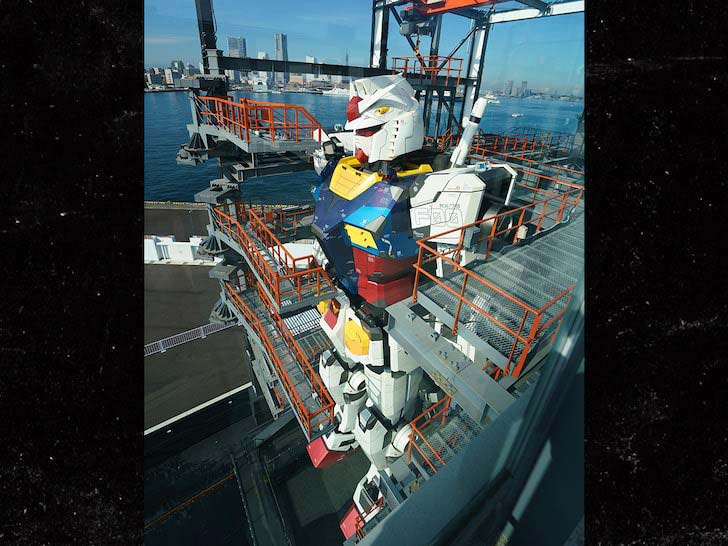

Gundam Style

No, you’re not watching Pacific Rim. This is an actual 18 meter (60 foot) robot.

Modeled on the popular Gundam robot from multiple TV series and manga, this is the largest robot ever created. And it looks awesome!

The giant, true-to-life robot has gone live in Japan as the main attraction at the Gundam Factory Yokohama on Yamashita Pier.

Made with more than 20 giant moving parts, Gundam is able to roll, walk, and move its arms. It also opens its eyes and turns its head, and that’s just a teaser of what visitors at the park will be treated to. The robot will display a new pose every half hour, plus it can kneel and flex its fingers.

Visitors at the park (which was rescheduled to open in December after being postponed in October because of the pandemic) will be offered close-up views of the robot by climbing the observation decks situated between 15 and 18 meters (49.2 to 59 feet) high on the Gundam-Dock Tower. A Gundam-Lab exhibition facility is available on-site, including a virtual reality dome “to simulate sitting inside the cockpit of the 25 ton mobile suit.”

Gundam Factory Yokohama is scheduled to be open through March 2022.

Super-powered Team Member

A team from the University of Liverpool has designed a robot that can perform chemistry experiments up to 1,000 times faster than its human counterparts.

“Our strategy here was to automate the researcher, rather than the instruments. This creates a level of flexibility that will change both the way we work and the problems we can tackle,” Professor Andrew Cooper from the University’s Department of Chemistry and Materials Innovation Factory said in a recent statement. “This is not just another machine in the lab. It’s a new super-powered team member, and it frees up time for the human researchers to think creatively.”

The robot is designed to be small and fit into the average lab setting, making it versatile for an array of different tasks. It’s also capable of moving itself around a lab. Using a single robotic arm, the robot worked for over eight days straight and completed 688 experiments with a very low error rate.

The task was to choose between a variety of different samples, operations, and measurements to identify a photocatalyst that is more sensitive than those used today. Photocatalysts are incredibly important in the race to create clean energy, as they produce hydrogen from water when exposed to sunlight. Hydrogen is used extensively in power generation and in large industrial processes like fertilizer production and petroleum refining, so producing it in huge quantities is vital.

Autonomously choosing which experiments to perform out of a possible 98 million different options, the robot made a huge discovery: a photocatalyst six times more sensitive than those currently available. The team hopes that the catalyst will be helpful in lowering the environmental impact of hydrogen production, and the robot can continue to aid in material discoveries.
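The article doesn't say how the robot decides which experiment to run next. As a rough illustration of the explore/exploit trade-off such a system faces, here is a minimal sketch using a simple upper-confidence-bound (UCB1) bandit over a tiny, hypothetical set of candidate formulations. The candidate values, noise level, and budget are invented for the example, and this is a swapped-in generic technique, not the Liverpool team's method.

```python
import math
import random


def ucb_select(counts, means, t, c=2.0):
    """Pick the candidate with the highest upper confidence bound.

    Untried candidates are chosen first; afterwards the score balances the
    observed mean (exploitation) against an uncertainty bonus (exploration).
    """
    for i, n in enumerate(counts):
        if n == 0:
            return i
    return max(
        range(len(counts)),
        key=lambda i: means[i] + math.sqrt(c * math.log(t) / counts[i]),
    )


def run_campaign(true_activity, budget, noise=0.05, seed=0):
    """Simulate an autonomous campaign over a small, hypothetical candidate set."""
    rng = random.Random(seed)
    k = len(true_activity)
    counts = [0] * k
    means = [0.0] * k
    for t in range(1, budget + 1):
        i = ucb_select(counts, means, t)
        # Stand-in for a real measurement: hidden true value plus bounded noise.
        y = true_activity[i] + rng.uniform(-noise, noise)
        counts[i] += 1
        means[i] += (y - means[i]) / counts[i]  # running average of results
    best = max(range(k), key=lambda i: means[i])
    return best, counts


best, counts = run_campaign([0.2, 0.5, 0.9, 0.4], budget=200)
print(best, counts)
```

With these made-up numbers, most of the 200 "experiments" end up on the most active candidate. A real search over 98 million options would need a model that generalizes between similar formulations rather than treating each one independently.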

Cyborg Cockroaches

The Digital Nature Group at the University of Tsukuba in Japan is working towards a “post ubiquitous computing era consisting of seamless combination of computational resources and non-computational resources.” By “non-computational resources,” they mean leveraging the natural world, which for better or worse includes insects.

At small scales, the capabilities of insects far exceed the capabilities of robots. However, do you really want cyborg cockroaches hiding all over your house all the time?

Remote controlling cockroaches isn’t a new idea, and it’s a fairly simple one. By stimulating the left or right antenna nerves of the cockroach, you can make it think that it’s running into something and get it to turn in the opposite direction. Add wireless connectivity, some fiducial markers, an overhead camera system, and a bunch of cyborg cockroaches, and you have a resilient swarm that can collaborate on tasks.
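The steering trick described above reduces to a small feedback rule: compare the insect's heading (from its fiducial marker) with the direction to its goal (from the overhead camera), then stimulate the antenna on the side it should turn away from. Below is a minimal sketch of that rule; the function names, the 15-degree deadband, and the angle convention are assumptions for illustration, not details from the Tsukuba project.

```python
import math


def wrap_angle(a):
    """Wrap an angle to the range (-pi, pi]."""
    while a <= -math.pi:
        a += 2 * math.pi
    while a > math.pi:
        a -= 2 * math.pi
    return a


def steer(pos, heading, target, deadband=math.radians(15)):
    """Decide which antenna (if any) to stimulate.

    pos, target: (x, y) positions from the overhead camera.
    heading: radians, counter-clockwise from the +x axis, read from the marker.
    Stimulating one antenna mimics an obstacle on that side, so the insect
    turns the opposite way.
    """
    desired = math.atan2(target[1] - pos[1], target[0] - pos[0])
    error = wrap_angle(desired - heading)
    if error > deadband:
        return "right"  # goal is to the left: fake an obstacle on the right
    if error < -deadband:
        return "left"   # goal is to the right: fake an obstacle on the left
    return None         # roughly on course: no stimulation


print(steer((0, 0), 0.0, (0, 10)))
```

Running this rule for every tracked insect on each camera frame is one simple way a swarm could be herded toward assigned "pixel" positions.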

The researchers suggest that the swarm could be used as a display (by making each cockroach into a pixel), to transport objects or to draw things. There’s also some mention of “input or haptic interfaces or an audio device,” which is a little unnerving.

Cockroaches were chosen for their impressive ruggedness, efficiency, high power-to-weight ratio, and mobility. Whenever you don’t need the swarm to perform some task, you can deactivate the control system. When you do need them again, turn the control system on and experience the nightmare of your cyborg cockroach swarm reassembling itself from all over your house. (Yikes!)

Virus Killer

MIT has developed a robot capable of disinfecting an entire 4,000-square-foot warehouse in 30 minutes, and it may soon be used in schools and grocery stores.

Using a type of UV-C light to kill viruses and other harmful microorganisms, the robot can disinfect huge areas very quickly with little assistance. It was designed in a collaboration that began back in April 2020 between MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), Ava Robotics, and the Greater Boston Food Bank (GBFB) to help combat the ongoing coronavirus pandemic.

Since the bot is capable of completely killing coronavirus wherever the UV light touches, researchers believe that it could be a good option for disinfecting public stores and restaurants to limit further spread of the virus.

UV-C lamps emit short-wavelength ultraviolet light that can sterilize surfaces and liquids rapidly and cheaply. UV-C attacks the genetic material (DNA or RNA) of microorganisms directly, disrupting it and killing or inactivating the pathogen. As a result, UV-C is able to kill tough microorganisms that other methods may struggle with, including MRSA and airborne pathogens.

The robot features a UV-C array built on top of a mobile robot (previously built by Ava Robotics) that is completely autonomous and free from any human supervision. The robot maps the given space and creates waypoints, delivering set doses of UV at each checkpoint, and successfully disinfected the entire GBFB warehouse in 30 minutes. It’s designed to either aid or replace chemical sterilization, which can be expensive and time-consuming.
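Delivering "set doses of UV at each checkpoint" comes down to a standard relation: dose (mJ/cm²) equals irradiance (mW/cm²) multiplied by exposure time (s). The sketch below turns that into a dwell time per waypoint. The inverse-square falloff, the reference irradiance, the target dose, and the waypoint distances are all assumptions for illustration; the article gives none of the MIT/Ava robot's actual figures.

```python
def irradiance_at(distance_m, ref_irradiance_mw_cm2, ref_distance_m=1.0):
    """Irradiance at a distance, using a simple inverse-square approximation.

    This is an assumption: real lamp arrays, shadows, and reflections
    deviate from an ideal point source.
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return ref_irradiance_mw_cm2 * (ref_distance_m / distance_m) ** 2


def dwell_time_s(target_dose_mj_cm2, irradiance_mw_cm2):
    """Seconds to hold position so a surface receives the target dose.

    dose (mJ/cm^2) = irradiance (mW/cm^2) x exposure time (s)
    """
    if irradiance_mw_cm2 <= 0:
        raise ValueError("irradiance must be positive")
    return target_dose_mj_cm2 / irradiance_mw_cm2


def plan_route(waypoints, target_dose_mj_cm2, ref_irradiance_mw_cm2):
    """waypoints: list of (name, distance in metres to the farthest surface to cover)."""
    plan = []
    for name, distance_m in waypoints:
        e = irradiance_at(distance_m, ref_irradiance_mw_cm2)
        plan.append((name, dwell_time_s(target_dose_mj_cm2, e)))
    total = sum(t for _, t in plan)
    return plan, total


# Hypothetical numbers: 10 mJ/cm^2 target dose, 1 mW/cm^2 at 1 m.
print(plan_route([("aisle-1", 1.0), ("aisle-2", 2.0)], 10.0, 1.0))
```

The inverse-square term explains why waypoint placement matters: doubling the distance to a surface quadruples the time the robot must linger there.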

It’s hoped that robotic sterilization could reduce the manpower needed for disinfection, freeing up labor to help combat the crisis while protecting workers from COVID-19.

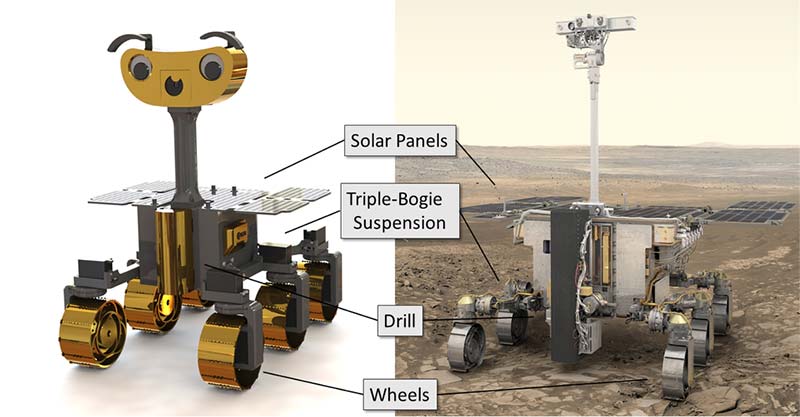

DIY Mars Rover

Europe’s Rosalind Franklin ExoMars rover has a younger ‘sibling’: ExoMy. The blueprints and software for this mini-version of the full-size Mars explorer are available for free so that anyone can 3D print, assemble, and program their own ExoMy.

The six-wheeled ExoMy rover was designed by ESA’s Planetary Robotics Laboratory, which specializes in developing locomotion platforms and navigation systems to support ESA’s planetary exploration missions.

According to Swiss trainee Miro Voellmy, anyone with a 3D printer can build their own ExoMy for an estimated budget of about $600. The source code is available on GitHub along with a step-by-step assembly guide and tutorials.

AI in the Army Now

Say there’s a dismounted squad navigating rugged terrain amid high-intensity combat, tasked with finding enemy Humvees, yet the enemy targets are dispersed and hidden.

What if that squad used AI and computers to find the enemy instead of trying to overcome all of their environmental and line-of-sight challenges? Enemy force location patterns and information from multiple soldiers’ viewpoints might all be instantly calculated and fed back to soldiers and decision-makers in a matter of seconds.

This concept — designed to use biological elements of the human brain as sensors — is fast evolving at the Army Research Laboratory. The science is based on connecting high-tech AI-empowered sensors with the electrochemical energy emerging from the human brain. A signal from the brain can be captured “before the brain can cognitively do something,” according to scientists.

Electrical signals emitted by the human brain resulting from visual responses to objects seen can be instantly harnessed and merged with analytical computer systems to identify moments and locations of great combat relevance. This is accomplished by attaching a conformal piece of equipment to soldiers’ glasses, engineered to pick up and transmit neurological responses.

A US Army Combat Action Badge is pinned on the uniform of a soldier (photo courtesy of Scott Olson/Getty Images).

“The computer can now map it if, when a soldier looks at something, it intrigues them. The human brain can be part of a sensing network,” J. Corde Lane, Ph.D., director, Human Research and Engineering, Combat Capabilities Development Command, Army Research Laboratory, told Warrior in a recent interview.

Think of an entire group of soldiers all seeing something at once, yet from different angles. That response data can then instantly be aggregated and analyzed to (if needed) dispatch a drone, call for air support, or direct ground fires to a specific target. A collective AI system can gather, pool, and analyze input from a squad of soldiers at one time, comparing responses to one another to paint an overall holistic combat scenario picture.
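The article doesn't explain how the pooled responses are turned into a location. One hypothetical way to illustrate "seeing something at once, yet from different angles" is to assume each soldier whose brain signal flags an object also contributes a line of sight (position plus gaze bearing), and then estimate the point closest to all of those lines in a least-squares sense. Everything in this sketch, including the input format, is an assumption for illustration rather than the Army Research Laboratory's approach.

```python
import math


def triangulate(observations):
    """Least-squares intersection of 2D lines of sight.

    observations: list of ((x, y), bearing_rad), one per soldier whose
    response flagged the object; bearing is counter-clockwise from +x.
    Minimizes the summed squared perpendicular distance from the estimate
    to every line by solving (sum P_i) x = sum P_i p_i, with P_i = I - d d^T.
    """
    a11 = a12 = a22 = 0.0
    b1 = b2 = 0.0
    for (px, py), theta in observations:
        dx, dy = math.cos(theta), math.sin(theta)
        # Projection onto the normal of this line of sight.
        p11 = 1 - dx * dx
        p12 = -dx * dy
        p22 = 1 - dy * dy
        a11 += p11
        a12 += p12
        a22 += p22
        b1 += p11 * px + p12 * py
        b2 += p12 * px + p22 * py
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-9:
        raise ValueError("lines of sight are (nearly) parallel")
    return ((a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det)


# Two hypothetical soldiers 10 m apart, both reacting to the same vehicle.
print(triangulate([((0, 0), math.radians(45)), ((10, 0), math.radians(135))]))
```

Adding more observers makes the estimate more robust, because noisy bearings partially cancel out in the least-squares solve.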

“With opportunistic sensing, we can identify where those Humvees are. Now I know dynamically where the threat objects are for the mission. This group of individuals has given me that information without them having to radio back. Information is automatically extracted by soldiers doing their normal behavior,” John Touryan, researcher, Cognitive Neural Sciences, Army Research Lab, commented.

AI-empowered machine learning can factor prominently here, meaning the computer analytics process can identify patterns and other interwoven variables to accurately forecast where other Humvees might be based on gathered information. Such a technology might then accurately direct soldiers to areas of great tactical significance.

Touryan explained it this way, saying that an AI system could, in effect, say “this group of soldiers is very interested in Humvees and vehicles, so let me analyze the rest of the environment and find out where those are, so that when they come around the corner they are not going to be surprised that there is a Humvee right there.”

By drawing upon an integrated database of historical factors, previous combat, and known threat objects, the AI-driven computer system could even alert soldiers about threats they may not be seeing. The computer could, as Touryan puts it, find “blind spots.”

“What we hope is that within this framework, AI will understand the human and not just be rigid. We want it to understand how soldiers are reacting to the world,” Lane explained.

Dropping Dragon Eggs

As the land burns, Dragon Eggs rain down from the sky, each one exploding into flames as it hits the ground.

An apocalyptic scene from some Game of Thrones-type fantasy show? Nope. It’s the real-life efforts of a company called Drone Amplified (https://droneamplified.com), and while it might sound counterintuitive, the idea is that bombarding the land with these miniature fireballs can actually help fight blazes like the devastating wildfires that continually ravage the West Coast.

“The Dragon Eggs are a brand name for a specific type of what is more generally known as an ignition sphere,” Carrick Detweiler, CEO of Drone Amplified, told Digital Trends recently. “The ignition spheres have been used for decades by manned helicopters to perform prescribed burns and backburns on wildfires. One of the main ways to contain wildfires is to use backburns to remove the fuels [such as] dead wood in advance of the main wildfire. This then allows firefighters to contain and put out the wildfire.”

The ignition spheres contain potassium permanganate and — while they’re capable of exploding into flames when required — they’re safe to handle and transport. This means that they can be carried on a drone and then used to disperse over areas.

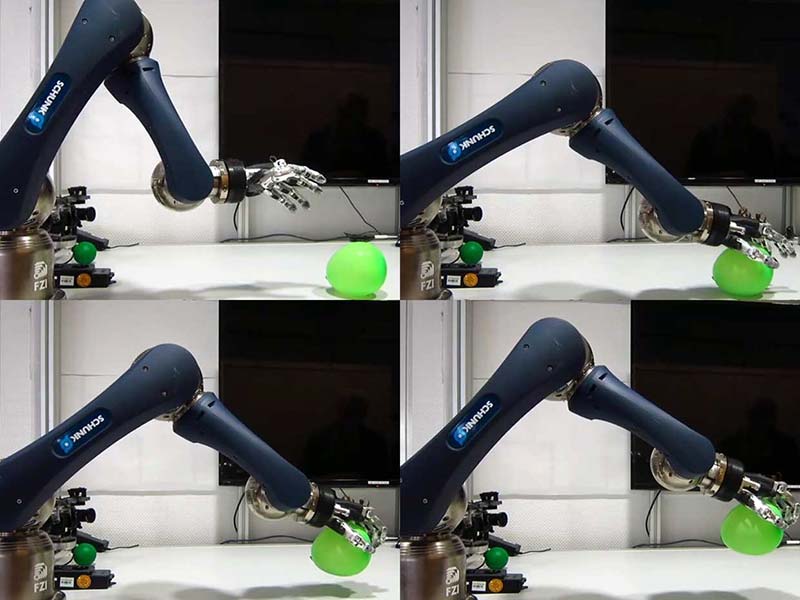

Pick-up Artist

Reaching for a nearby object seems like a mindless task, but the action requires a sophisticated neural network that took humans millions of years to evolve. Now, robots are acquiring that same ability using artificial neural networks.

In a recent study, a robotic hand “learned” to pick up objects of different shapes and hardness using three different grasping motions.

The key to this development is something called a “spiking neuron.” Like real neurons in the brain, artificial neurons in a spiking neural network (SNN) fire together to encode and process temporal information. Researchers study SNNs because this approach may yield insights into how biological neural networks function, including our own.
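The article doesn't specify which neuron model the FZI system uses. To show what "spiking" means in practice, here is a minimal sketch of the leaky integrate-and-fire (LIF) neuron, a common textbook building block for SNNs: the membrane potential integrates input, leaks back toward rest, and emits a spike (then resets) when it crosses a threshold. All parameter values are illustrative assumptions.

```python
def lif_spikes(input_current, dt=1e-3, tau=0.02, v_rest=0.0,
               v_thresh=1.0, v_reset=0.0, r=1.0):
    """Simulate one leaky integrate-and-fire neuron; return spike times (s).

    input_current: one input value per time step of length dt.
    """
    v = v_rest
    spikes = []
    for step, i_in in enumerate(input_current):
        # Euler step: potential leaks toward rest while integrating input.
        v += dt / tau * (-(v - v_rest) + r * i_in)
        if v >= v_thresh:
            spikes.append(step * dt)  # record when the neuron fired
            v = v_reset               # reset after the spike
    return spikes


weak = lif_spikes([1.5] * 200)
strong = lif_spikes([3.0] * 200)
print(len(weak), len(strong))
```

Information lives in the timing: a stronger input makes the neuron fire sooner and more often, while an input too weak to reach threshold produces no spikes at all.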

“The programming of humanoid or bio-inspired robots is complex,” says Juan Camilo Vasquez Tieck, a research scientist at FZI Forschungszentrum Informatik in Karlsruhe, Germany. “And classical robotics programming methods are not always suitable to take advantage of their capabilities.”

Conventional robotic systems must perform extensive calculations, Tieck says, to track trajectories and grasp objects. However, a robotic system like Tieck’s, which relies on an SNN, first trains its neural net to better model system and object motions. After this, it grasps items more autonomously by adapting to the motion in real time.

The new robotic system by Tieck and his colleagues uses an existing robotic hand called a Schunk SVH five-finger hand, which has the same number of fingers and joints as a human hand.

The researchers incorporated an SNN into their system, which is divided into several sub-networks. One sub-network controls each finger individually, either flexing or extending the finger. Another concerns each type of grasping movement; for example, whether the robotic hand will need to do a pinching, spherical, or cylindrical movement.

For each finger, a neural circuit detects contact with an object using the currents of the motors and the velocity of the joints. When contact with an object is detected, a controller is activated to regulate how much force the finger exerts.

“This way, the movements of generic grasping motions are adapted to objects with different shapes, stiffness, and sizes,” says Tieck. The system can also adapt its grasping motion quickly if the object moves or deforms.
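The per-finger logic described above (detect contact from motor current and joint velocity, then switch to a controller that regulates force) can be illustrated with conventional code. The sketch below is a plain, non-spiking stand-in: the thresholds, the use of motor current as a force proxy, and the proportional gain are all hypothetical, and it does not use the Schunk SVH hand's actual interface.

```python
from dataclasses import dataclass


@dataclass
class FingerState:
    motor_current: float   # amps (hypothetical units)
    joint_velocity: float  # rad/s


def contact_detected(s, current_threshold=0.8, velocity_threshold=0.05):
    """Contact heuristic: the motor works hard while the joint barely moves."""
    return (s.motor_current > current_threshold
            and abs(s.joint_velocity) < velocity_threshold)


def finger_command(s, target_current=0.6, gain=0.5, free_velocity=1.0):
    """Return a velocity command for one finger.

    Before contact: keep closing at a fixed speed.
    After contact: a proportional controller trims the command so motor
    current, used here as a stand-in for grip force, settles near target.
    """
    if not contact_detected(s):
        return free_velocity
    return gain * (target_current - s.motor_current)


print(finger_command(FingerState(0.2, 0.9)))  # still closing freely
print(finger_command(FingerState(1.0, 0.0)))  # squeezing too hard: relax
```

Because each finger runs this loop on its own, a soft sponge and a rigid bottle naturally end up with different finger positions under the same generic grasp.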

The researchers’ robotic hand used its three different grasping motions on objects without knowing their properties. Target objects included a plastic bottle, a soft ball, a tennis ball, a sponge, a rubber duck, different balloons, a pen, and a tissue pack. The researchers found, for one, that pinching motions required more precision than cylindrical or spherical grasping motions.

“For this approach, the next step is to incorporate visual information from event-based cameras and integrate arm motion with SNNs,” says Tieck. “Additionally, we would like to extend the hand with haptic sensors.”

The long-term goal, he says, is to develop “a system that can perform grasping similar to humans, without intensive planning for contact points or intense stability analysis, and [that is] able to adapt to different objects using visual and haptic feedback.”

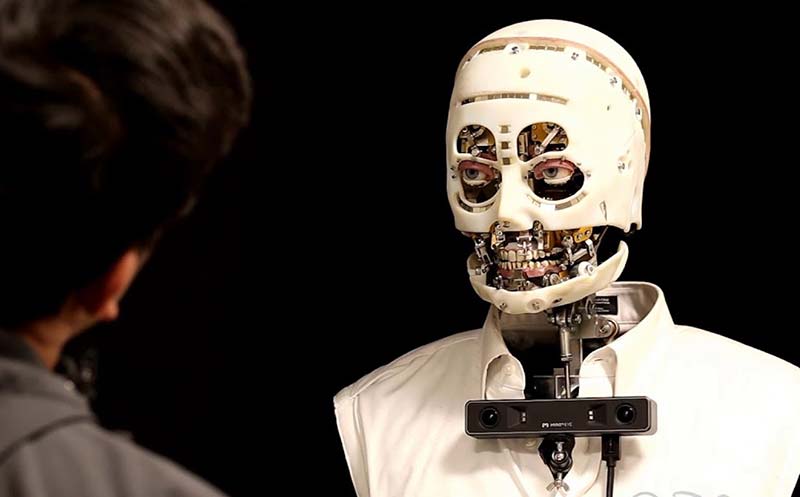

Look Into My Eyes

While it’s not totally clear to what extent human-like robots are better than conventional robots in most applications, entertainment is one area where they clearly shine. The folks over at Disney Research (who are all about entertainment) have been working on this sort of thing for a very long time, and some of their animatronic attractions are quite impressive.

The next step for Disney is to make its animatronic figures (which currently feature scripted behaviors) perform interactively with visitors. The challenge is that this is where you start to enter Uncanny Valley territory, the unsettling effect that can arise when you try to create “the illusion of life,” which Disney explicitly says is what it is trying to do.

The approach Disney is using is more animation than biology or psychology. In other words, they’re not trying to figure out what’s going on in our brains to make our eyes move the way they do when we’re looking at other people and then base their control system on that. Instead, Disney just wants it to look right.

This “visual appeal” approach is totally fine, and there’s been an enormous amount of human-robot interaction (HRI) research behind it already, albeit usually with less explicitly human-like platforms. Speaking of human-like platforms, the hardware is a “custom Walt Disney Imagineering Audio-Animatronics bust,” which has degrees of freedom (DoFs) that include neck, eyes, eyelids, and eyebrows.

In order to decide on gaze motions, the system first identifies a person to target with its attention using an RGB-D camera. If more than one person is visible, the system calculates a curiosity score for each, currently simplified to be based on how much motion it sees. Based on the highest curiosity score among the people it can see, the system chooses from a variety of high-level gaze behavior states, including:

Read: The Read state can be considered the “default” state of the character. When not executing another state, the robot character will return to the Read state. Here, the character will appear to read a book located at torso level.

Glance: A transition to the Glance state from the Read or Engage states occurs when the attention engine indicates that there is a stimuli with a curiosity score [...] above a certain threshold.

Engage: The Engage state occurs when the attention engine indicates that there’s a stimuli [...] to meet a threshold and can be triggered from both Read and Glance states. This state causes the robot to gaze at the “person of interest” with both the eyes and head.

Acknowledge: The Acknowledge state is triggered from either Engage or Glance states when the person of interest is deemed to be familiar to the robot.
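The four states above form a small finite-state machine driven by the curiosity score. Below is a minimal sketch of that idea; the numeric thresholds, the "familiar" set, and the exact transition rules (for example, letting Acknowledge persist) are assumptions, since the description only names the states and which ones can trigger which.

```python
from enum import Enum


class Gaze(Enum):
    READ = "read"
    GLANCE = "glance"
    ENGAGE = "engage"
    ACKNOWLEDGE = "acknowledge"


def pick_target(motion_by_person):
    """Curiosity score simplified to observed motion; return (id, score) or None."""
    if not motion_by_person:
        return None
    pid = max(motion_by_person, key=motion_by_person.get)
    return pid, motion_by_person[pid]


def next_state(state, motion_by_person, familiar, glance_th=0.3, engage_th=0.7):
    """Return (new_state, person_of_interest) for one update tick."""
    target = pick_target(motion_by_person)
    if target is None or target[1] < glance_th:
        return Gaze.READ, None  # nothing interesting: back to the default state
    pid, score = target
    # Acknowledge is only reachable once the robot is already attending.
    if state in (Gaze.GLANCE, Gaze.ENGAGE, Gaze.ACKNOWLEDGE) and pid in familiar:
        return Gaze.ACKNOWLEDGE, pid
    if score >= engage_th:
        return Gaze.ENGAGE, pid
    return Gaze.GLANCE, pid


print(next_state(Gaze.READ, {"alice": 0.5, "bob": 0.2}, set()))
```

A visitor who moves a little earns a glance; one who keeps moving a lot earns full engagement; and a familiar face being attended to gets acknowledged.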

Running underneath these higher level behavior states are lower level motion behaviors like breathing, small head movements, eye blinking, and saccades (the quick eye movements that occur when people look between two different focal points). The term for this hierarchical behavioral state layering is a subsumption architecture, which goes all the way back to Rodney Brooks’ work on robots like Genghis in the 1980s and Cog and Kismet in the 1990s. It provides a way for more complex behaviors to emerge from a set of simple, decentralized low-level behaviors.

This robot uses a subsumption architecture to exhibit more realistic eye gaze. Photo courtesy of Disney Research.
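The layering of simple behaviors under higher-level gaze states can be illustrated with a greatly simplified, priority-override reading of subsumption: every layer proposes commands for some actuator channels, and a higher layer suppresses a lower one only on the channels it writes. The channel names and values here are hypothetical, not Disney's actual control interface.

```python
def arbitrate(layers):
    """Merge actuator commands from behavior layers, lowest priority first.

    Each layer returns a dict of channel -> command. A higher layer
    overrides a lower one only on the channels it writes; every other
    channel still comes from the simpler layers beneath it.
    """
    command = {}
    for layer in layers:  # ordered from lowest to highest priority
        command.update(layer())
    return command


def breathing():
    return {"neck_pitch": 0.02, "eyelids": 1.0}


def blink():
    return {"eyelids": 0.0}


def engage_gaze():
    return {"eye_yaw": 0.3, "neck_yaw": 0.2}


print(arbitrate([breathing, blink, engage_gaze]))
```

Even while the gaze layer is steering the eyes and neck toward a visitor, the breathing motion and blinking underneath keep running, which is much of what makes the figure read as alive.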

Cozy Up With Snugglebot

Loneliness is an increasing problem in today’s world, especially during the current pandemic when people are finding themselves increasingly isolated. Social robotics research has highlighted how robots can be designed to support people and improve their mood and general wellness. Snugglebot is what we all need right now.

Snugglebot is a cuddly robotic companion that needs your love and attention. It needs to be taken care of, cuddled, and kept warm. It’s physically comforting (soft, warm, and weighted) and engaging. Its tusk lights up and it wiggles to get attention or to show appreciation when it’s hugged.

This robot prototype is the brainchild of Danika Passler Bates, a student in the Department of Computer Science and a recipient of an Undergraduate Summer Research Award (URA). Bates saw a need to help people feel better, especially those in isolation during the pandemic. Her research indicated that caring for something (like a pet) often improves an individual’s wellness and motivation. The person has to take care of it by keeping the microwaveable compress (in its pouch) warm, helping to create a connection.

Photo courtesy of University of Manitoba.

Robot Space Claw

Remember those arcade grabbing machines? Well, a much larger version of that same concept could soon be shot into orbit and used to clean up space junk — the hundreds of thousands of pieces of space debris which orbit the Earth at unimaginable speeds, threatening to cause catastrophic damage to any satellite or spaceship they might collide with.

That, in essence, is the idea behind a new project sponsored by the European Space Agency (ESA) that will see Swiss startup ClearSpace construct and launch a debris removal robot (a giant space claw by any other name) called ClearSpace-1.

The enormous space claw will seek out and grab large bits of space detritus and then send them hurtling towards Earth where (fortunately) they’ll burn up in the atmosphere.

“The capture system is based on four arms that are actuated to close when the target is inside the capture volume,” a spokesperson for ClearSpace told Digital Trends recently. “This system is simple and also ensures reusability [since] it can be opened and closed, and so used many times. Its design can adapt to the different shapes that space debris may have — from rocket bodies to defunct satellites.”
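The spokesperson describes the arms closing "when the target is inside the capture volume." A minimal sketch of such a trigger check follows, assuming a cylindrical capture volume in front of the spacecraft and a set of tracked points on the debris. The shape, dimensions, and function names are hypothetical; ClearSpace hasn't published these details in the article.

```python
import math


def in_capture_volume(point, radius=1.5, depth=(0.5, 3.0)):
    """True if a point lies inside a cylindrical capture volume.

    point: (x, y, z) in the chaser's body frame, with +z pointing out
    between the open arms. radius and depth (metres) are hypothetical.
    """
    x, y, z = point
    return depth[0] <= z <= depth[1] and math.hypot(x, y) <= radius


def should_close(points):
    """Close the arms only when every tracked point on the object is inside."""
    return bool(points) and all(in_capture_volume(p) for p in points)


print(should_close([(0.0, 0.0, 1.0), (0.5, 0.5, 2.0)]))
```

Requiring every tracked point to be inside, rather than just the object's center, is a conservative choice meant to avoid clipping an awkwardly shaped rocket body or satellite with a closing arm.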

ClearSpace-1’s first target for cleanup is an old Vespa payload adapter: a rocket part that on Earth would weigh 250 pounds and has been in orbit since 2013. Once that cleanup job is done, presumably ClearSpace-1 will move on to other pieces of debris that it can shift from orbit.

With so many pieces of space debris to clear up (many of them under 10 cm across, which makes them much harder to collect), it will be quite a while before orbit truly is a “clear space.” However, provided all goes to plan, things will be off to a strong start. This could wind up being the first space claw of many.

“The development of the service to ESA is four years long, and we are [currently] in the preliminary design stage,” the spokesperson said. “The launch is planned in 2025.”

Dream Reader

Google search queries and social media posts provide a means of peeking into the ideas, concerns, and expectations of millions of people around the world. Using the right web-scraping bots and big data analytics, everyone from marketers to social scientists can analyze this information and use it to draw conclusions about what’s on the mind of massive populations of users.

Could AI (artificial intelligence) analysis of our dreams help do the same thing? That’s an interesting concept, and it’s one that researchers from Nokia Bell Labs in Cambridge, U.K., have been busy exploring.

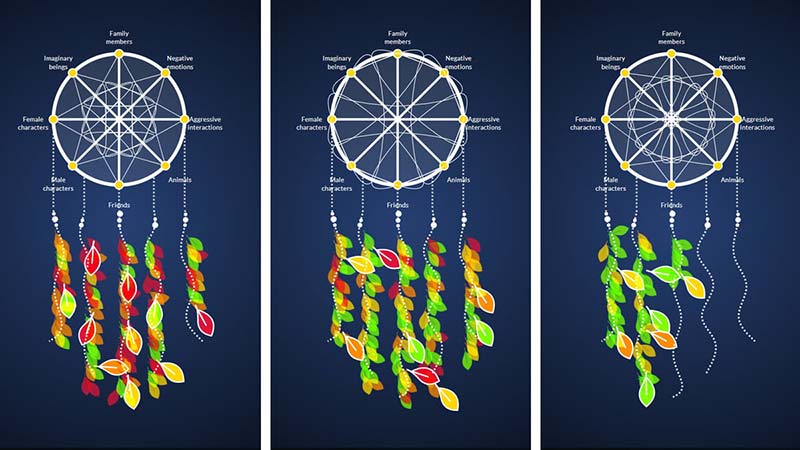

They’ve created a tool called “Dreamcatcher” that can (so they claim) use the latest Natural Language Processing (NLP) algorithms to identify themes from thousands of written dream reports.

Dreamcatcher is based on an approach to dream analysis referred to as the continuity hypothesis. This hypothesis, which is supported by strong evidence from decades of research into dreams, suggests that our dreams are reflections of the everyday concerns and ideas of dreamers.

The AI tool, which Luca Aiello, a senior research scientist at Nokia Bell Labs, described to Digital Trends as an “automatic dream analyzer,” parses written descriptions of dreams and then scores them according to an established dream analysis inventory called the Hall-Van de Castle scale.

“This inventory consists of a set of scores that measure by how much different elements featured in the dream are more or less frequent than some normative values established by previous research on dreams,” Aiello said. “These elements include, for example, positive or negative emotions, aggressive interactions between characters, presence of imaginary characters, et cetera. The scale, per se, does not provide an interpretation of the dream, but it helps quantify interesting or anomalous aspects in them.”

The written dream reports came from an archive of 24,000 such records taken from DreamBank — the largest public collection of English language dream reports yet available. The team’s algorithm is capable of pulling these reports apart and reassembling them in a way that makes sense to the system.

For instance, it sorts references into categories like “imaginary beings,” “friends,” “male characters,” “female characters,” and so on, and then further groups the interactions between them as “aggressive,” “friendly,” or “sexual” to indicate different types of interaction.
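As Aiello describes it, the scale measures how much more or less often certain elements appear than normative values. The sketch below illustrates that idea in a deliberately crude way: it tags reports using a tiny hypothetical keyword lexicon (Dreamcatcher itself uses NLP models, not keyword lists), computes the share of reports mentioning each category, and compares that share with a norm using Cohen's h, one common effect-size measure for two proportions. The lexicon and norm values are invented for illustration.

```python
import math
import re

# Hypothetical mini-lexicon; Dreamcatcher uses NLP models, not keyword lists.
LEXICON = {
    "imaginary": {"dragon", "ghost", "monster", "alien"},
    "friends": {"friend", "friends", "buddy"},
    "aggression": {"fight", "hit", "attack", "yelled"},
}


def categories_in(report):
    """Return the set of categories whose keywords appear in a report."""
    words = set(re.findall(r"[a-z']+", report.lower()))
    return {cat for cat, vocab in LEXICON.items() if words & vocab}


def cohens_h(p1, p2):
    """Effect size between two proportions (positive means p1 > p2)."""
    return 2 * math.asin(math.sqrt(p1)) - 2 * math.asin(math.sqrt(p2))


def score_reports(reports, norms):
    """Map category -> (share of reports mentioning it, deviation from norm)."""
    n = len(reports)
    counts = {cat: 0 for cat in LEXICON}
    for r in reports:
        for cat in categories_in(r):
            counts[cat] += 1
    return {cat: (counts[cat] / n, cohens_h(counts[cat] / n, norms[cat]))
            for cat in LEXICON}


reports = [
    "A dragon chased me and my friend",
    "We had a fight at school",
    "I was flying over the sea",
    "My friends and I saw a ghost",
]
print(score_reports(reports, {"imaginary": 0.1, "friends": 0.5, "aggression": 0.25}))
```

As the quote notes, a score like this doesn't interpret a dream; it only flags which elements occur unusually often or rarely compared with the baseline.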

Pictionary Robot Style

Much like new Alexa Skills arriving on your Amazon Echo, AI has spent the past couple of decades gradually gaining the ability to best humanity at more and more of our beloved games: chess with Deep Blue in 1997; Jeopardy with IBM Watson in 2011; Atari games with DeepMind in 2013; Go with AlphaGo in 2016; and so on.

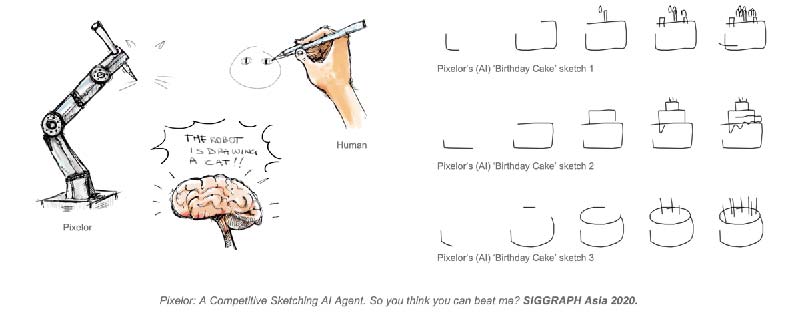

With that background, it’s not too much of a shocker to hear that AI can now perform compellingly well at Pictionary: the charades-inspired word guessing game that requires one person to draw an image and others to try to figure out what they’ve sketched as quickly as possible.

That’s what researchers from the U.K.’s University of Surrey recently carried out with the creation of Pixelor: a “competitive sketching AI agent.” Given a visual concept, Pixelor is able to draw a sketch that is recognizable (both by humans and machines) as its intended subject as quickly as, or even faster than, a human competitor.

“Our AI agent is able to render a sketch from scratch,” Yi-Zhe Song, leader of Computer Vision and Machine Learning at the Center for Vision Speech and Signal Processing at the University of Surrey, commented recently. “Give it a word like ‘face’ and it will know what to draw. It will draw a different cat, a different dog, a different face, every single time. But always with the knowledge of how to win the Pictionary game.”

Being able to reduce a complex real-world image into a sketch is pretty impressive. It takes a level of abstraction to look at a human face and see it as an oval with two smaller ovals for eyes, a line for a nose, and a half-circle for a mouth. In kids, the ability to perceive an image in this way shows (among other things) a burgeoning cognitive understanding of concepts.

However, as with many aspects of AI (a pattern often summarized as Moravec’s Paradox: “hard problems are easy and the easy problems are hard”), it’s a significant challenge for machine intelligence, despite being a basic, unremarkable skill for the majority of two-year-old children.

There’s more to Pixelor than just another trivial game-playing bot, however. Just like a computer system has both a surface-level interface that we interact with and under-the-hood backend code, so, too, does every major AI game-playing milestone have an ulterior motive.

Unless they’re explicitly making computer games, research labs don’t spend countless person-hours building game-playing AI agents just to add another entry on the big list of things humans are no longer the best at. The purpose is always to advance some fundamental part of AI problem-solving.

In the case of Pixelor, the hidden objective is to make machines that are better able to figure out what’s important to a human in a particular scene.

Confections of a Robot

The chocolate “world of experience” at Zotter Schokoladen Manufaktur, whose managing director is Josef Zotter, draws more than 270,000 visitors annually. Since March 2019, this world of chocolate in Bergl near Riegersburg, Austria, has been enriched by a new attraction: the world’s first chocolate and praline robot from KUKA.

The KUKA robot delights young and old alike, serving up chocolate and pralines to guests according to their personal taste.

Open Source Bionic Leg Kicks ...

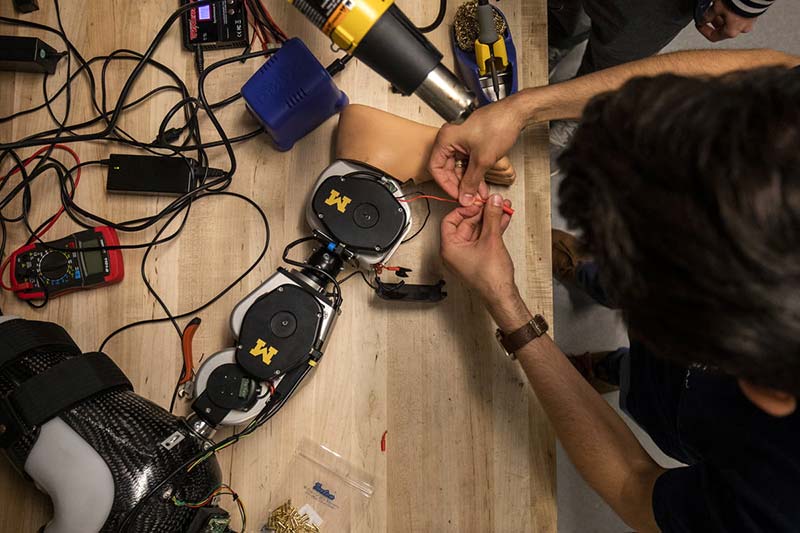

If you wanted to cover a large distance and had the world’s best sprinters at your disposal, would you have them run against each other or work together in a relay? That, in essence, is the problem Elliott Rouse, a biomedical engineer and director of the Neurobionics Lab at the University of Michigan, Ann Arbor, has been grappling with for the past several years.

Rouse is one of many working to develop a control system for bionic legs — artificial limbs that use various signals from the wearer to act and move like biological limbs.

“Probably the biggest challenge to creating a robotic leg is the controller that’s involved, telling them what to do,” Rouse stated recently. “Every time the wearer takes a step, a step needs to be initiated. And when they switch, the leg needs to know their activity has changed and move to accommodate that different activity. If it makes a mistake, the person could get very, very injured — perhaps falling down some stairs, for example. There are talented people around the world studying these control challenges. They invest years of their time and hundreds of thousands of dollars building a robotic leg. It’s the way things have been since this field began.”
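The article doesn't describe the Open-Source Leg's controllers. To make Rouse's point concrete, here is a minimal sketch of one widely used approach in powered-prosthesis research, finite-state impedance control: the leg behaves like a spring and damper whose settings change with the gait phase and the current activity. The parameter values, the load-based phase detection, and the function names are hypothetical, and the hard part Rouse describes (recognizing that the activity has changed) is simply passed in as an argument here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Impedance:
    stiffness: float    # N*m/rad
    damping: float      # N*m*s/rad
    equilibrium: float  # rad


# Hypothetical knee parameters per (activity, gait phase); real values are tuned per user.
PARAMS = {
    ("walk", "stance"): Impedance(150.0, 3.0, 0.05),
    ("walk", "swing"): Impedance(30.0, 0.5, 1.0),
    ("stairs", "stance"): Impedance(250.0, 5.0, 0.3),
    ("stairs", "swing"): Impedance(40.0, 0.8, 1.4),
}


def gait_phase(load_n, load_threshold=50.0):
    """Stance while the foot carries weight, swing otherwise (simplified)."""
    return "stance" if load_n > load_threshold else "swing"


def knee_torque(activity, load_n, angle, velocity):
    """Impedance law: tau = -k * (theta - theta_eq) - b * theta_dot."""
    p = PARAMS[(activity, gait_phase(load_n))]
    return -p.stiffness * (angle - p.equilibrium) - p.damping * velocity


print(knee_torque("walk", 0.0, 0.5, 0.0))  # swing: knee pulled toward 1.0 rad
```

If the activity label is wrong, say "walk" while the wearer is actually on stairs, the leg applies the wrong stiffness and equilibrium angle, which is exactly the kind of mistake that could send someone falling.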

When it comes to control systems for bionic limbs, the problem is that, in order to even be able to start developing control systems, individual research labs around the world first have to build the underlying hardware. It would be like Apple and Samsung having to build their own computer operating systems from scratch before starting to design their next-generation smartphones.

This is where Rouse’s project, the Open-Source Leg, comes into play. As the researchers behind it explained: “The overarching purpose of this project is to unite a fragmented field. Research in prosthetic hardware design, prosthetic control, and amputee biomechanics is currently done in silos. Each researcher develops their own robotic leg system on which to test their control strategies or biomechanical hypotheses. This may be successful in the short term since each researcher produces publications and furthers knowledge. However, in the long term, this fragmented research approach hinders results from impacting the lives of individuals with disabilities, culminating in an overarching failure of the field to truly have the impact that motivated it.”

Photos courtesy of Joseph Xu/Michigan Engineering, Communications & Marketing.

The Open-Source Leg is (as its name suggests) an open-source bionic leg that could become the ubiquitous hardware system for facilitating growth in the area of prostheses control. The design is simple (easily assembled), portable (lightweight and powered by onboard batteries), economical (it costs between $10,000 and $25,000, compared to the $100,000-plus commercially available powered prosthetics), scalable, and customizable.

Detailed instructions are available online at https://opensourceleg.com, and Rouse and his colleagues will even build legs and ship them out to researchers who are unable to build one themselves.
