Bots in Brief

Got Your Goat

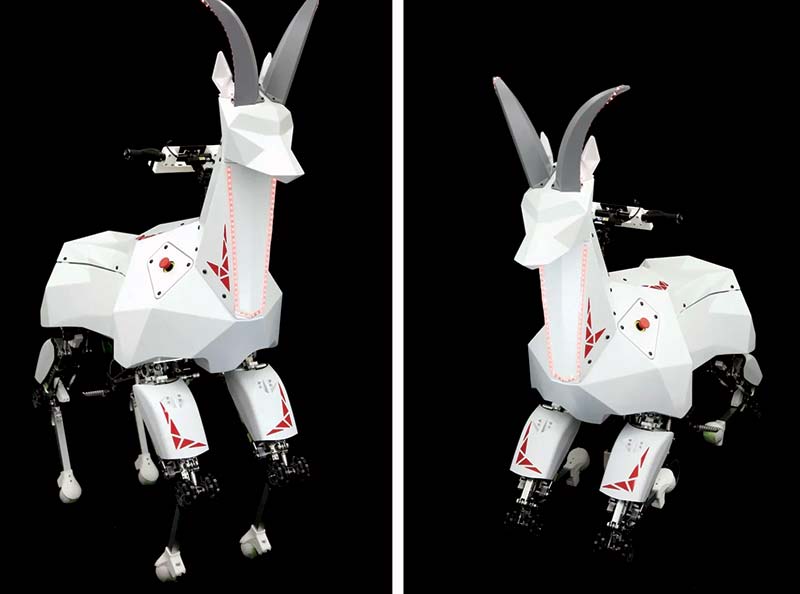

This is Bex. It’s a robotic ibex designed by Kawasaki and inspired by the fiercely horny species of goat native to many mountainous regions of North Africa and the Middle East. Bex made its first appearance at iREX, held earlier this year, and unlike a real ibex, Kawasaki’s version comes with the added feature of wheeled knees.

When the robot kneels down and deploys a pair of drive wheels under its belly, it can drive around instead of walking. The omni-directional knee wheels and dual center drive wheels also give the robot the ability to perform a tank-steer maneuver or turn in place.

The Bex robot was outfitted with a passenger-carrying back and handlebars that support a rider up to 100 kg (220 lb), with the rider able to control the robot via the handlebars.

Bex under development at Kawasaki Robotics. Photo courtesy of Kawasaki Robotics.

Kawasaki has been working on a “Robust Humanoid Platform” (RHP) called Kaleido since 2015, and Bex is a “friend” of that program. Masayuki Soube, who is in charge of development of the RHP, was interviewed recently on Kawasaki Robotics’ website about the Bex program. The conversation was conducted in Japanese, but here is Google Translate’s best attempt at rendering Soube’s observations in workable English:

Through the development of Kaleido, we felt the difficulty of biped robots. Because humanoid robots have the same shape as humans, they are highly versatile, with the potential to do everything that humans can ultimately do. However, it will take a long time to put it to practical use. On the other hand, we are also developing a self-propelled service robot that moves on wheels, but legs are still suitable for moving on rough terrain rather than wheels. So, halfway between humanoid robots and wheeled robots, [we] wondered if there was an opportunity. That’s why we started developing Bex, a quadruped walking robot. We believe that the walking technology cultivated in the development of humanoid robots can definitely be applied to quadruped walking robots.

Further comments from Soube on more practical applications were included in the translation:

First of all, Bex can carry light cargo, such as transporting materials at a construction site. The other application is inspection. In a vast industrial plant, Bex can look around and take images from its camera that can be remotely checked to see what the instruments are doing. Bex can also carry crops harvested by humans on farmland. Although the base of Bex is a legged robot, the upper body of Bex is not fixed and we are thinking of adapting it according to the application. If it is a construction site, we will form a partnership with the construction manufacturer, and if it is a plant, we will form a partnership with the plant manufacturer and leave the upper body to us. Kawasaki Heavy Industries will focus on the four legs of the lower body and want to provide it as an open innovation platform.

Officials with Kawasaki noted that the robot’s head can be replaced with other suitable alternatives such as a horse’s head or even nothing at all. They also noted that Bex has been engineered to move quickly in its wheeled configuration and that the walking configuration is to deal with uneven terrain. Also, the team put stability at the forefront.

Bex is inspired by the ibex, a mountain goat that is incredibly tough. Photo courtesy of Getty Images.

When the robot is rolling, all its wheels are always on the ground. When it’s walking, its gait keeps at least two feet on the ground. This reduces computation requirements and makes the robot safer to use around humans.

Open Wide

Oropharyngeal-swab sampling is dull, dirty, and dangerous all at once, making it an ideal task for a robot to do instead, as long as that robot can avoid violently stabbing you in the throat.

In a recent paper published in IEEE Robotics and Automation Letters, researchers from the Shenzhen Institute of Artificial Intelligence and Robotics for Society introduced a robot that’s designed to protect the safety of medical staff during the sampling process as well as free them up to do more useful and less gross things. Let’s face it, taking these samples is not fun for anyone.

ViKiNG Lays Waste to Traditional Navigation

The way robots navigate is very different from the way most humans navigate. Robots perform best when they have total environmental understanding with some sort of full geometric reconstruction of everything around them, plus exact knowledge of their own position and orientation. LiDARs, preexisting maps, powerful computers, and even motion-capture systems help fulfill these demands.

However, this stuff doesn’t always scale that well. With that in mind, Dhruv Shah and professor Sergey Levine at the University of California, Berkeley, are working on a different approach. Their take on robotic navigation does away with high-end power-hungry components. What suffices for their navigation technique are a monocular camera, some neural networks, a basic GPS system, and some simple hints in the form of a very basic human-readable overhead map. Such hints may not sound all that impactful, but they enable a very simple robot to efficiently and intelligently travel through unfamiliar environments to reach far-off destinations.

Navigation basically consists of understanding where you are, where you want to go, and how you want to get there. For robots, this is the equivalent of a long-term goal. Some far-off GPS coordinate can be reached by achieving a series of short-term goals, like staying on a particular path for a few meters. Achieve enough short-term goals and you reach your long-term goal.

However, there’s a sort of medium-term goal in the mix too, which is especially tricky because it involves making more complex and abstract decisions about what the “best” path might be. In other words, which combination of short-term goals best serves the mission to reach the long-term goal?

This is where the hints come in for ViKiNG. Using either a satellite map or a road map, the robot can make more informed choices about what short-term goals to aim for, vastly increasing the likelihood that it’ll achieve its objectives. Even with a road map, ViKiNG is not restricted to roads; it just may favor roads because that’s the information it has.

Satellite images (which include roads and other terrain) give the robot more information to work with. The maps are hints — not instructions — which means that ViKiNG can adapt to obstacles it wasn’t expecting. Of course, maps can’t tell the robot exactly where to go at smaller scales (whether those short-term goals are traversable or not), but ViKiNG can handle that by itself with just its monocular camera.
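The idea of a map acting as a hint rather than an instruction can be illustrated with a minimal Python sketch. This is not the Berkeley team's implementation. The `Candidate` class, the `pick_subgoal` function, the traversability scores, the map penalties, and the weighting are all assumptions made up for illustration. Each candidate short-term goal gets a cost that combines its straight-line distance to the destination with a penalty read off an overhead map, and anything the local camera model judges untraversable is discarded no matter what the map says.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Candidate:
    """A hypothetical short-term goal the robot could drive to next."""
    name: str
    position: Tuple[float, float]  # (x, y) in meters, in an assumed local frame
    traversability: float          # 0..1, stand-in for a camera-based estimate
    map_penalty: float             # >= 0, stand-in for an overhead-map hint (0 = on a road)


def pick_subgoal(candidates, goal, hint_weight=1.0, min_traversability=0.5):
    """Return the traversable candidate with the lowest hinted cost, or None."""
    best, best_cost = None, math.inf
    for c in candidates:
        if c.traversability < min_traversability:
            continue  # local perception overrides the map: hints are not instructions
        cost = math.dist(c.position, goal) + hint_weight * c.map_penalty
        if cost < best_cost:
            best, best_cost = c, cost
    return best


goal = (100.0, 0.0)
candidates = [
    Candidate("through_hedge", (10.0, 0.0), 0.1, 0.0),   # closest, but blocked
    Candidate("along_road", (8.0, 6.0), 0.9, 0.0),       # slightly longer, on a road
    Candidate("across_field", (9.0, -3.0), 0.8, 20.0),   # shorter, but off-road
]
print(pick_subgoal(candidates, goal).name)                    # along_road
print(pick_subgoal(candidates, goal, hint_weight=0.0).name)   # across_field
```

With the hint switched off (`hint_weight=0.0`), the sketch falls back to the geometrically shortest traversable option, which mirrors the point that a road map makes the robot favor roads without restricting it to them.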

If this little robot looks familiar, it’s because you may have seen it a couple of years ago through Greg Kahn, a student of Levine’s. Back then, the robot was named BADGR, and its special skill was learning to navigate through novel environments based on simple images and lived experience (whatever that is for the robots). BADGR has now evolved into ViKiNG, which stands for “Vision-Based Kilometer-Scale Navigation with Geographic Hints.”

Weird Kind of Necking

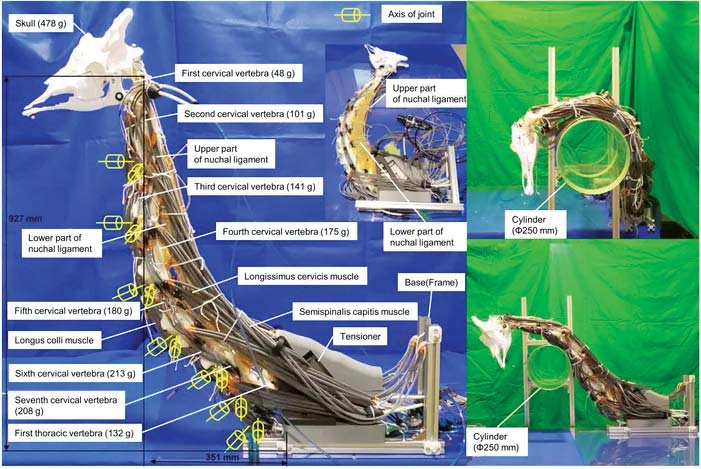

Researchers at the Tokyo Institute of Technology have been experimenting with mimicking something that’s an impressive example of both flexibility and power in the animal kingdom: the neck of the giraffe.

Giraffe necks can weigh up to 150 kilograms and be two meters long, but they’ve evolved to be bendy and strong. Not just to get those tasty high-growing leaves but also because male giraffes battle each other with their necks, sometimes to the death.

“The neck of a giraffe has excellent characteristics that can serve as a good alternative for designing a large robotic mechanism. For example, the neck can rapidly move when performing ‘necking,’ a motion where the giraffes strike each other’s necks,” the researchers wrote in the abstract to their paper, referring to the neck-based combat that takes place between males as a way of asserting dominance. “Furthermore, the neck of a giraffe helps prevent impacts and adapts to the shape and hardness of the opponent’s neck during necking.”

The mechanism consists of a skeletal mechanism fabricated using a 3D printer, a gravity-compensation mechanism mimicking nuchal ligament using rubber material and tensioners, a redundant muscle driving system using thin artificial muscles, and a particular joint using rubber disks and mimicking ligaments.

The robotic giraffe neck does its best to mimic the structure of an actual giraffe neck with vertebrae and tendons arranged similarly, and thin McKibben pneumatic artificial muscles providing contracting forces the same way real muscles do. It’s complicated, but it enables a significant amount of flexibility.

Photo courtesy of Tokyo Institute of Technology.

The researchers suggest that developing a robotic system with a practical combination of power, flexibility, and control might be more straightforward to do with a giraffe neck model than an elephant trunk model. You’d sacrifice some softness and flexibility, but you might be able to actually make it work in a way that could exert the amount of force required to be useful in industrial applications.

The name of this robot is Giraffe Neck Robot #1, which (while not the most creative) suggests that other necks will follow. From the sound of things, the next step will be cranking up the power by several orders of magnitude by using hydraulic rather than pneumatic actuators.

Rock and Walk

People around the world have long been captivated by the moai: a collection of statues that stand sentry along the coast of Easter Island. The statues are well known not just for their immense size and distinct facial features, but also for the mystery that shrouds their geographic location. The question that piques everyone’s curiosity is how did ancient Rapa Nui people move these ginormous rocks — some weighing as much as 80 tonnes — across distances of up to 18 kilometers?

Back in 2011, a group of archaeologists made some progress in potentially unraveling this mystery. They conducted an experiment whereby three hemp ropes were tied to the head of a moai replica. Using two of the ropes angled at the sides to rock the statue back and forth and the third rope for guidance, they were able to “rock and walk” the replica forward, demonstrating at least one possible way that the Rapa Nui people may have moved the real moai so long ago. In the experiment, 18 people were able to move a 4.35 tonne replica 100 meters in just 40 minutes.

The “walking” megalithic statues of Easter Island. Photo courtesy of the Journal of Archaeological Science/Elsevier.

More recently, a group of researchers sought to use robots to explore this “rock and walk” technique further. Jungwon Seo, an assistant professor at Hong Kong University of Science and Technology, has previously used robots to study how to precisely move and place Go stones. (Go stones are round objects placed on a board. They are colored either black or white and normally number 181 for black and 180 for white, or sometimes 180 for each.)

“Objects heavier than a Go stone, like the moai statue, may pose additional fresh challenges. But we were motivated by the difficulty,” he says.

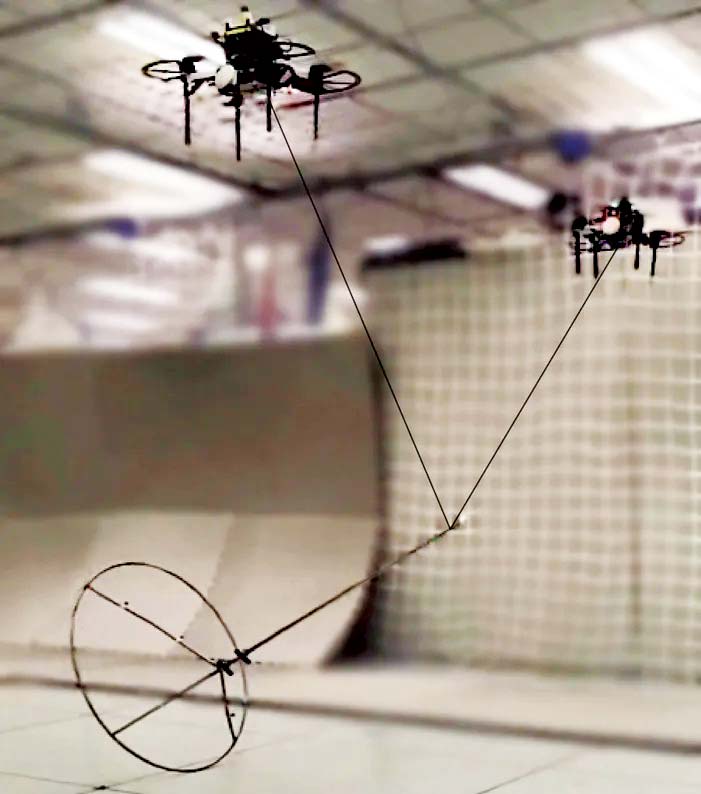

His team devised a “rock and walk” technique and implemented it four different ways: using single or dual robotic arms, or single or dual custom-made quadrotor aircraft.

In all the scenarios, the researchers used an object that had features similar to those of the moai, such as a low center of gravity and a round edge along the bottom, the latter of which helps facilitate the dynamic rolling maneuvers. Wider bases allow objects to take bigger “steps” forward.

In the first experiment, a single robotic arm was used to rock and walk a weighted object with a wide base. The robotic arm used a small cage to hold the top of the object and manipulate it.

In another setup, two robotic arms — one on either side of the object — manipulated it using cables attached near its top (this scenario is most similar to how the archaeologists moved the moai replica).

Still shot from cable-driven dual quadrotor rock and walk.

Similar trials featured either one or two quadrotor aircraft. Regardless of how many points of contact are used to manipulate an object, the benefits of the rock-and-walk technique are undeniable. Because part of the object remains in contact with the ground, the robots need to bear only a fraction of the object’s weight using this technique.

For example, the experiments showed that, whereas the quadrotor aircraft needed to expend 100 percent thrust to lift a one-kilogram payload, it needed only 20 percent thrust to perform the rock and walk technique with the same object. Most notably, the robots were also able to rock and walk objects on upward and downward slopes.

“If the object can’t be lifted for some reason, [for example, if a drone cannot manage the payload of an object], rock and walk might be a viable option,” says Seo.

Seo foresees these rock and walk techniques being helpful for scenarios when helicopters or other machines may not be sufficient to move objects. It could even be used when the object’s shape is not ideal.

“Even if an object proves unsuitable for rock and walk, there is still a possibility of adjusting its geometric and mass properties for successful rock and walk; for example, by attaching additional masses because the robot system does not have to bear the entire weight of the object,” Seo explained.

Avian Android

Have you ever looked at a little bird nestled in a tree and wondered how on earth they can land on tiny branches?

Well, wonder no more because two engineers have figured out exactly how, and they’ve used their knowledge to create a cool new robot.

Mark Cutkosky and David Lentink have spent years developing the nature-inspired aerial grasper, known as SNAG. It’s a robot modelled on a peregrine falcon’s legs, and has the ability to perch on almost any surface.

The engineers hope that their avian android can help improve the range and abilities of drones. For example, improved landing technology may help drones store and conserve their battery life by perching on stable surfaces instead of hovering.

Photos courtesy of Lentink Lab / Will Roderick.

There’s also the possibility it may help scientists collect data more easily in the natural environment, especially in dense or hard-to-reach forests.

“We want to be able to land anywhere — that’s what makes it exciting from an engineering and robotics perspective,” says David Lentink.

The robot is also able to catch objects like bean bags and tennis balls. What more could you want?!

Fatbergs No Match for Pipe-Worm

GE has upgraded its worm-like tunneling robot with highly sensitive whiskers — similar to a cockroach’s whiskers — that give it enhanced perception capabilities for industrial pipeline monitoring, inspection, and repair. (Cockroach whiskers are super sensitive, and are able to detect slight changes in the air and the environment around them.) GE’s tunneling robot debuted in May 2020, working its way through a makeshift tunnel inside of a research lab. Now GE Research — the technology development arm of GE — is showcasing what the robot can do in a real world setting.

The Programmable Worm for Irregular Pipeline Exploration, or “Pipe-worm,” is GE’s latest adaptation of its autonomous giant earthworm-like robot. It was recently demonstrated at the company’s research campus in Niskayuna, where it traveled over 100 meters of pipe.

During Pipe-worm’s demonstration, it used its whiskers to navigate turns, as well as changes in the pipe’s diameter and in altitude. The robot uses artificial intelligence (AI) and the sensory data it gathers from its whiskers to automatically detect turns, elbows, junctions, pipe diameter, and pipe orientation, among other things. The robot uses this information to create a map of the pipeline network in real time.

The GE research team and Deepak Trivedi (second from left) with Pipe-worm. Photo courtesy of GE.

“GE’s Pipe-worm takes the concept of the plumber’s drain snake to a whole new level,” Deepak Trivedi, a soft robotics expert at GE Research who led the development of Pipe-worm, said. “This AI-enabled autonomous robot has the ability to inspect and potentially repair pipelines all on its own, breaking up the formation of solid waste masses like fatbergs that are an ongoing issue with many of our nation’s sewer systems. We’ve added cockroach-like whiskers to its body that gives it greatly enhanced levels of perception to make sharp turns or negotiate its way through dark, unknown portions of a pipeline network.”

Like the autonomous giant earthworm robot, Pipe-worm has powerful fluid-powered muscles which make it strong enough for heavy duty jobs.

GE Research’s Robotics and Autonomy team are looking to apply Pipe-worm’s capabilities to other inspection and repair applications, like jet engines and power turbines in the aviation and power sectors. According to Trivedi, this application would involve a scaled-down version of the robot.

ServeBot Ready to Roll

After a rocky start, four years, and several iterations, LG’s CLOi is now ready for prime time, with the Korean company announcing that the CLOi ServeBot is ready for the US market.

LG believes the semi-autonomous contraption is ideal for “complex commercial environments” such as restaurants, retail stores, and hotels.

Able to handle loads of up to 66 pounds, the 53-inch-tall CLOi can operate for up to 11 hours on a single charge. ServeBot greets each encounter with a friendly face, complete with cartoon-like eye animations presented on the unit’s top-mounted 9.2-inch touchscreen.

Don’t expect anything to happen in a hurry, though, as CLOi only has a top speed of 2.2 mph.

The robot can be programmed for a range of floor plans, “enabling precise multi-point deliveries ranging from densely packed restaurants to sprawling office complexes,” LG said, with a slew of sensors and cameras helping it to avoid collisions with passing staff and customers.

The Great Escape

Apparently tired of cleaning up after messy hotel guests, a Roomba recently made a dash for it and trundled to freedom.

The great escape took place at a Travelodge budget hotel in Cambridge, England.

A robot vacuum cleaner (similar to this one) got to the hotel door and just kept on going. Image courtesy Getty Images.

According to a now-deleted Reddit message posted by the hotel’s assistant manager, the robovac failed to sense — or simply ignored — the hotel entrance and just kept going.

Staff said it “could be anywhere” while well-wishers on social media hoped the vacuum enjoyed its travels, as “it has no natural predators” in the wild.

It was found under a hedge a few days later.

Pigeons vs. Drones

Feral pigeons are responsible for over a billion dollars of economic losses here in the US every year. They’re especially annoying because the species isn’t native to this country. They were brought over from Europe (where they’re known as rock doves) because you can eat them, but enough of the birds escaped and liked it here, so now there are stable populations all over the country being gross.

In addition to carrying diseases (some of which can occasionally infect humans), pigeons are prolific and inconvenient urban poopers, deploying their acidic droppings in places that are exceptionally difficult to clean. Rooftops, as well as ledges and overhangs on building facades, are full of cozy nooks and crannies, and despite some attempts to brute-force the problem by putting metal or plastic spikes on every horizontal surface, there are usually more surfaces (and pigeons) than can reasonably be spiked.

Researchers at EPFL in Switzerland believed that besting an aerial adversary would require an aerial approach, so they deployed an autonomous system that can identify roof-invading pigeons and then send a drone over to chase them away.

The researchers noticed some interesting drone-on-bird behaviors:

Several interesting observations regarding the interactions of pigeons and the drone were made during the experiments. First, the distance at which pigeons perceive the drone as a threat is highly variable and may be related to the number of pigeons. Whereas larger flocks were often scared simply by takeoff (which happened at a distance of 40-60 m from the pigeons), smaller groups of birds often let the drone come as close as a few meters. Furthermore, the duration in which the drone stays in the target region is an important tuning parameter. Some pigeons attempted to return almost immediately but were repelled by the hovering drone.

The researchers, who published their work in a recent issue of the journal IEEE Access, suggest that it might be useful to collaborate with some zoologists (ornithologists?) as a next step, as it’s possible that “the efficiency of the system could be radically changed by leveraging knowledge about the behaviors and interactions of pigeons.”

A Real Softy

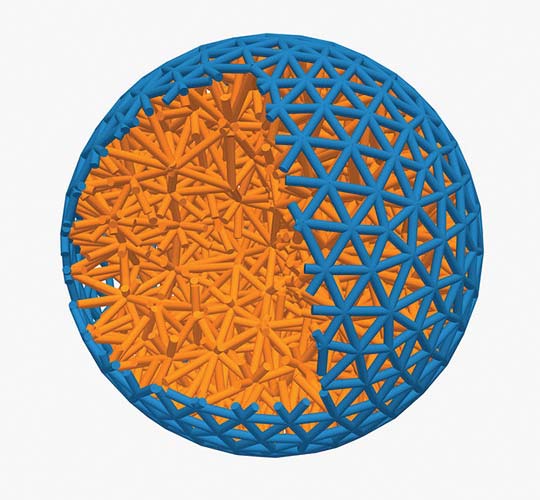

A research team from the Universities of Bath and Birmingham are hoping to reinvent the way we design robots.

Typically, robots (like robotic arms) are controlled by a single central controller. The research team, however, is hoping to create robots that are made from many individual units that act individually but cooperatively to determine the machine’s movement.

The researchers are doing this using active matter: collections of a large number of active agents that resist the way that ordinary soft materials move. Typical soft materials will shrink into a sphere to create the smallest surface area possible (think water beading into droplets).

Active matter can be used in many ways. For example, a layer of nanorobots wrapped around a rubber ball could distort the shape of the ball by working in unison.

“Active matter makes us look at the familiar rules of nature — rules like the fact that surface tension has to be positive — in a new light,” Dr. Jack Binysh, the first author on the study, said. “Seeing what happens if we break these rules and how we can harness the results is an exciting place to be doing research.”

A simulated ball covered in tiny robots that can distort its shape. Figure courtesy of the University of Bath.

During the study, the team simulated a 3D soft solid with a surface that experiences active stresses. The stresses expand the surface of the material, pulling the solid underneath with it. This can cause the entire shape to change. This change could even be tailored by altering the elastic properties of the material.

“This study is an important proof of concept and has many useful implications,” Dr. Anton Souslov, another author on the paper, said. “For instance, future technology could produce soft robots that are far squishier and better at picking up and manipulating delicate materials.”

Eventually, the scientists hope to develop machines with arms made of flexible material with tiny robots embedded in the surface. The technology could also be used to coat the surface of nanoparticles in a responsive active material. This would allow them to customize the size and shape of drug delivery capsules.

For now, the researchers have already started their next phase of work: applying the general principles they’ve learned to designing specific robots. The results of their study were published recently in Science Advances.

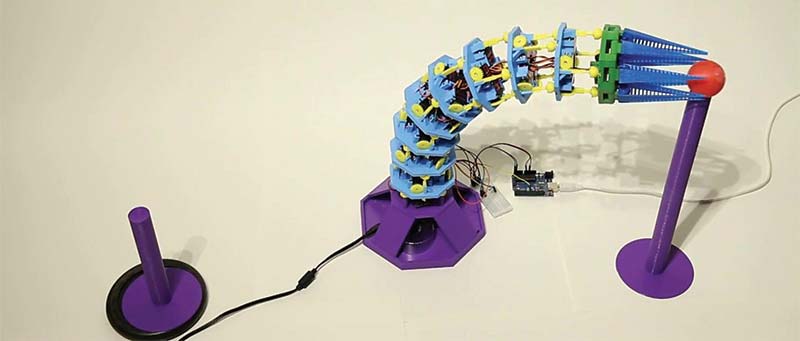

Trunk-Cated

Elephants are known for their long and powerful trunks, so a group of scientists created a robot that mimics the movement of the animal’s super long nose.

The team, based at the University of Tübingen in Germany, used 3D printing equipment to develop the robot trunk. It’s made up of individual segments which are stacked on top of one another. They are held together by special joints controlled by gears. The gears are driven by several motors and allow the trunk to tilt in all kinds of different directions. The robot can not only bend like an elephant’s trunk, but it can also extend or shorten in length.

To control the trunk, the scientists fed in examples of the instructions needed to move the robot in certain ways. From these examples, the system produced a specific control algorithm. The team used the elephant trunk robot as a proof of concept for a new type of algorithm called a “spiking neural network,” which works in a way very similar to real brains.

A small robot with a relatively basic computer could run a spiking neural network and continue to train itself when put to work, becoming more efficient or learning new tasks over time.
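For readers curious about what "spiking" means in practice, here is a minimal Python sketch of a single leaky integrate-and-fire neuron, a common textbook building block for spiking networks. It is not the Tübingen team's model, and the function name `simulate_lif` and every parameter value are assumptions chosen for illustration. The neuron accumulates input, slowly leaks charge, and emits a discrete spike only when its internal potential crosses a threshold.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Returns a list with one entry per step: 1 if the neuron spiked, else 0.
    All parameter values are illustrative, not taken from any published model.
    """
    potential = reset
    spikes = []
    for current in input_current:
        # Old charge decays by the leak factor, then new input is added.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = reset  # firing discharges the neuron
        else:
            spikes.append(0)
    return spikes


print(simulate_lif([0.4] * 10))  # [0, 0, 1, 0, 0, 1, 0, 0, 1, 0]
```

A steady input of 0.4 takes three steps to push the potential past 1.0, so the neuron fires on every third step. A weaker input such as 0.05 never fires at all, because the leak drains charge as fast as it arrives.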

“Our dream is that we can do this in a continuous learning setup where the robot starts without any knowledge and then tries to reach goals, and while it does this it generates its own learning examples,” Dr. Sebastian Otte, who worked on the project, explained to New Scientist.

Maicat Purr-fect for Feline Fans

Robotic pets are all the rage now, as they are cleaner, easier to care for, and overall more convenient to keep around the house. Robot dogs are usually the most popular ones, which is why Macroact wants to throw a bone to cat lovers too, with its adorable Maicat.

The Korean robotics startup recently unveiled the companion robot cat with AI (artificial intelligence), boasting about its ability to play right out of the box and generate personalized experiences for each user.

Designed as a cute and interactive companion, Maicat is an intelligent and evolving robotic animal equipped with OLED eyes, 20 actuators, a camera, a microphone, a speaker, and nine sensors. All of them help it analyze and recognize its surrounding environment, as well as communicate with its owner. The gadget comes with Bluetooth, Wi-Fi connectivity, and a dedicated app.

Maicat is made from a blend of ABS, glass, and silicone. It weighs 1 kg (2.2 lb) and measures 20 cm (7.8”) in length, 13 cm (5.1”) in width, and 20 cm (7.8”) in height. Macroact doesn’t mention the capacity of the cat’s lithium battery, but it does specify that Maicat has self-charging capabilities.

Ham Ham It Up

Meet robotic nibbler Amagami Ham Ham, a robot designed to bite the end of your finger in a cute and reassuring way. It’s the work of Liv Heart Corporation and Yukai Engineering, the same company responsible for Qoobo (the tailed cushion that made its debut last year). (See below for more details on Qoobo.)

There are two characters in the Amagami Ham Ham series: Yuzu the cat and Kotaro the Shiba Inu dog, both based on characters from Liv Heart’s Nemu Nemu series of plush toys. As an FYI, Amagami in Japanese means “soft bite,” while ham also means bite, but could be considered a cute way of saying so when said twice. Nemu nemu means sleepy, again in a similarly cute fashion. With that quick lesson out of the way, let’s talk about the biting.

Both Ham Hams sit about eight inches high, so are suitably small and cute looking. Put your finger in Yuzu or Kotaro’s mouth and it will begin to nibble on it. The robot’s biting action is driven by HAMgorithms (yes, that’s what they’re called here) that generate 24 different methods of biting your finger, including modes called Tasting HAM, Holding Tight HAM, and Massaging HAM. The type of bite used will be random.

At this point, you’re probably wondering why anyone would want to do this.

Well, apparently the biting motion is designed to recreate the sensation of having the tip of your finger nibbled by a pet or a baby. While human or animal babies eventually abandon the activity, Amagami Ham Ham will always be there to cutely nibble your finger, providing a degree of closeness and reassurance.

Whatever you think of any well-being benefits that come from having your fingers nibbled, it’s doubtful you’ve seen anything like Amagami Ham Ham before.

Yukai Engineering and Liv Heart Corporation are using crowdfunding to make Amagami Ham Ham a reality, and the campaign is due to go live this spring. The price has yet to be decided.

Qoobo

There are two types of Qoobo: the full-size version with its long tail, and the smaller Petit Qoobo with its stubby tail. When you stroke either Qoobo, the tail will move, and the speed and movement differ depending on the motion and force of your stroking. There are other differences between them. Petit Qoobo’s tail moves at a faster pace and in more varied ways, while Qoobo has a lazier personality until you pat the body, when the tail moves more frantically.

Petit Qoobo has a small heartbeat you can feel when it’s sitting on your lap or being held in your hands. The tail also reacts to sudden sounds like a clap or a laugh, while Qoobo’s tail will move of its own accord to greet you or to remind you it’s there. Neither makes its own noises, but the motor driving the tail does have a very distinct sound.

Qoobo doesn’t really need much attention outside of charging. The internal battery lasts for about a week with the pair activated each evening, but not during the day. A USB charging lead is included, and it plugs into a rather private area of Qoobo’s body. The fluffy coats can be removed and washed if they get grimy.

Wasps Be Gone

A company in Japan has unveiled a new contraption that is basically a drone with a vacuum cleaner attached. Rather than using it for cleaning hard-to-reach surfaces, the machine is designed to remove troublesome wasp nests.

Duskin Company is a cleaning and pest control firm based in the city of Osaka, about 250 miles southwest of Tokyo. It says its machine significantly improves the safety of this hazardous task. Around 15 people die every year while attempting to carry it out manually, according to The Mainichi.

The modified quadcopter features a funnel and a hose that leads to a cylinder in which the wasps and parts of the nest are collected. The setup allows the pest controller to stand a safe distance from the nest while gradually removing it using the drone.

A recent demonstration of the technology took about two hours to remove a single nest, including the manual cleanup work at the end. The wasp nest (about 35 centimeters in diameter) was hanging from the eaves of a two-story warehouse deep in the mountains in the city of Yabu, in the northern part of Hyogo Prefecture. The drone is 80 centimeters wide.

The specialized drone would be particularly useful in scenarios where the nest is in a location that’s hard for humans to access. In fact, Duskin said that on some occasions before it developed the drone, it had to pass on extermination jobs where the nest was in a position too difficult to safely reach.

Yusuke Saito, who is in charge of drone development at Duskin, explained, “When wasps recognize an enemy, they secrete an alarm pheromone and attack in groups. By attaching pheromones to the drone, they will gather at the machine and can be exterminated efficiently.”

Robotic Crack Sealer

As a system integrator, Pioneer has partnered with industry leaders to source the top components for the Robotic Crack Sealer. Because roadwork can be dangerous, the Robotic Crack Sealer was developed to save lives by moving road workers out of harm’s way.

The first partner, SealMaster, is a one-stop source for pavement maintenance products and equipment, with locations all across the US. FANUC is the global leader in automation solutions and the largest industrial robot manufacturer, with over half a million robots deployed around the world.

Vista Solutions is a machine vision solution provider specializing in vision guided robotics with custom developed smart and AI powered vision solutions.

The Robotic Crack Sealer is outfitted with a SealMaster CrackPro custom melter, retrofitted with electric-driven agitation and a dispense pump, jacketed hoses, and a custom end-of-arm tooling (EOAT) dispense nozzle.

A FANUC America Corporation six-axis robot equips the Robotic Crack Sealer with extensive reach and payload capabilities. Rated for over 100,000 hours, FANUC robots have been withstanding harsh manufacturing conditions for decades. These robots are reliable, repeatable, and dependable.

Vista Solutions has developed a smart vision system equipped with 3D line scanners and lasers. It’s located under the collapsible shroud, which keeps outside light sources from interfering. The scanned pavement data is sent to a PC, which uses a series of algorithms to identify which cracks require sealant. The PC then sends the corresponding points on the road to the robot.
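For readers curious how the scan-to-robot flow described above might look in software, here is a minimal sketch in Python. It is not Vista Solutions’ actual algorithm: the `Crack` record, the width and depth thresholds, and the function names are all hypothetical, chosen only to illustrate the idea of filtering detected cracks and handing the remaining points to the robot.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) position on the road, in meters


@dataclass
class Crack:
    """A crack already detected in the scan (hypothetical record)."""
    points: List[Point]  # points along the crack, in road coordinates
    width_mm: float      # measured crack width
    depth_mm: float      # measured crack depth


def needs_sealant(crack: Crack,
                  min_width_mm: float = 3.0,
                  min_depth_mm: float = 5.0) -> bool:
    """Decide whether a crack is worth sealing.

    The thresholds are illustrative assumptions, not published values.
    """
    return crack.width_mm >= min_width_mm and crack.depth_mm >= min_depth_mm


def waypoints_for_robot(cracks: List[Crack]) -> List[Point]:
    """Collect the road points of every crack that needs sealant."""
    path: List[Point] = []
    for crack in cracks:
        if needs_sealant(crack):
            path.extend(crack.points)
    return path


scan = [
    Crack(points=[(0.0, 0.0), (0.1, 0.0)], width_mm=4.0, depth_mm=6.0),
    Crack(points=[(1.0, 1.0)], width_mm=1.0, depth_mm=2.0),  # too small
]
robot_path = waypoints_for_robot(scan)
```

In this toy run only the first crack passes the filter, so only its two points would be sent on to the robot.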

Look Ma, No Steering Wheel

Alphabet’s self-driving car company, Waymo, has unveiled a design for a self-driving robotaxi without a steering wheel and pedals.

The autonomous vehicle specialist announced that it’s partnered with Chinese automaker Geely to build a Zeekr minivan filled entirely with passenger seats. It’s worth pointing out that even though Zeekr is a relatively new brand, Waymo still chose it as the manufacturer of its next ride-sharing robotaxi fleet.

The all-electric, self-driving minivan will be designed and developed in Gothenburg, Sweden, before being added to Waymo’s fleet “in the years to come,” according to the US company.

Waymo said the new vehicle features “a flat floor for more accessible entry, easy ingress and egress thanks to a B-pillarless design, low step-in height, generous head room and leg room, and fully adjustable seats.”

It added that riders traveling inside the Zeekr will also experience “screens and chargers within arm’s reach, and an easy to configure and comfortable vehicle cabin.” In the car’s front, a touchscreen is mounted in the middle of the instrument panel.

The prototype still features manual controls, as Waymo will be responsible for installing its Waymo Driver self-driving system in the shuttles before they are added to the Waymo fleet. The system is ranked at Level 4 on the SAE scale of self-driving capability since it can drive on its own for extended periods, though only under restricted conditions. Level 5 is the ultimate goal, as this represents a system that can drive anywhere a human driver could, under all conditions.

While Waymo appears keen to add the Zeekr minivan to its current fleet of autonomous vehicles that are undergoing testing on public roads, safety regulators will have the final say on whether to give the plan the green light, hence no firm timeline from Waymo.

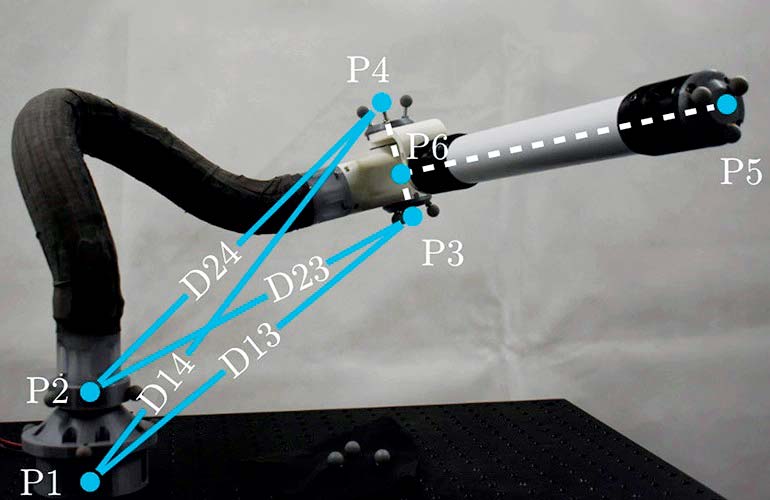

Armed with AR

Researchers at Imperial College London have created a malleable robotic arm. The arm is guided into shape by hand, with mixed reality smart glasses and motion tracking cameras helping the user to ensure precise positioning.

Many robotic arms are made with firm joints and limbs to ensure strength, but the researchers wanted to create an arm that is flexible enough to be used in multiple applications.

The arm is made malleable with layers of slippery mylar sheets which slide over each other and can be locked into place when the arm is in the correct position. The user is able to see templates using the augmented reality (AR) goggles, which superimpose the correct positioning onto the user’s environment. When the arm is in the correct place, the template turns green.
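The “template turns green” check can be pictured as a simple tolerance test on tracked points. The sketch below is an illustration only, not the Imperial team’s software: the function name, the 1-centimeter tolerance, and the "neutral" color used before alignment are all assumptions.

```python
import math
from typing import List, Tuple

Point3D = Tuple[float, float, float]  # (x, y, z) in meters


def template_color(tracked: List[Point3D],
                   template: List[Point3D],
                   tolerance_m: float = 0.01) -> str:
    """Return "green" when every tracked point on the arm lies within
    tolerance of its matching template point, otherwise "neutral".

    The tolerance and the "neutral" label are hypothetical choices.
    """
    if len(tracked) != len(template):
        raise ValueError("tracked and template must have the same length")
    for actual, target in zip(tracked, template):
        # math.dist gives the straight-line distance between two points
        if math.dist(actual, target) > tolerance_m:
            return "neutral"
    return "green"


template = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.3)]
close_enough = [(0.0, 0.0, 0.005), (0.0, 0.002, 0.3)]
too_far = [(0.0, 0.0, 0.0), (0.05, 0.0, 0.3)]
```

With these sample values, `template_color(close_enough, template)` gives "green", while `template_color(too_far, template)` stays "neutral" because the second point is 5 centimeters off.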

The arm is rigid from P6-P5 and has a joint at P6; the lower part of the arm is malleable.

The arm has several advantages over more traditional robotic arms. First, it’s lightweight, which makes it ideal for space travel where every extra pound counts. It’s also gentle, so it could be used as an extra hand in injury rehabilitation. The researchers hope the arm can be useful in manufacturing and vehicle and building maintenance as well.

Researchers decided to use AR to guide manipulations of the arm so that it could be done by people not familiar with robotics. They tested the system on five men aged 20-26 who had experience in robotics but not in manipulating malleable robots. Each of them was able to move the arm into the correct position.

Although the pool of participants was narrow, the researchers say their initial findings show that AR could be a successful approach to adapting malleable robots following further testing and user training.

The researchers, Dr. Nicolas Rojas and PhD researcher Angus Clark of the Dyson School of Design Engineering and PhD researcher Alex Ranne of the Department of Computing, published their results in IEEE Robotics and Automation Magazine.

The green outline of the arm shows where it should be positioned. Photos courtesy of Imperial College London.

“One of the key issues in adjusting these robots is accuracy in their new position. We humans aren’t great at making sure the new position matches the template, which is why we looked to AR for help,” Dr. Rojas explained. “We’ve shown that AR can simplify working alongside our malleable robot. The approach gives users a range of easy-to-create robot positions for all sorts of applications without needing so much technical expertise.”

The next steps will involve the researchers adding touch and audio elements to the AR to increase accuracy in positioning the robot. They’re also interested in strengthening the robot to make it more rigid when locked into position.
