AI, Chatbots, and More

By John Blankenship

Artificial Intelligence (AI) seems to be all over the news. This two-part series looks first at how a simple simulation can provide options for experimentation with AI. The second article will move on to explore how modern chatbot technologies differ from conventional approaches and how AI might affect the future of hobby robotics.

AI has its origins in the 1950s and progressed slowly over the years in several different directions. Many of the usable programs of the early days relied on a cause-and-effect database that could be searched for a match to the problem at hand. There was even a chatbot called ELIZA in the 1960s, but its capabilities paled when compared to the human-like engines that have evolved in the last few years.

In the next article in this series, we’ll explore some of the current AI systems and how they differ from earlier approaches, as well as how the new systems might affect the field of hobby robotics as we know it. For now, though, let’s explore some basic principles of AI to provide a glimpse of how machine learning can happen on a very basic level.

A Mobile Robot Example

If we’re talking about a simple mobile robot, for example, AI might be described as a system capable of making the robot interact with its environment in a reasonable way. A reasonable way could mean reacting as a human would in a similar situation, but many situations call for far less sophisticated capabilities.

For example, if we have a robot whose goal is to explore its environment, we might equip it with sensors so that it can detect objects that might get in its way and give it the capability to perform maneuvers to avoid any objects that have been detected. This is certainly not a complex problem, but that suits our purpose: we want examples simple enough to explore in a short magazine article.

A controlling program could handle this task by simply reading sensor data, comparing it to specific patterns, and then reacting in an appropriate way. For example, if the robot detects an obstacle ahead and slightly to the right of its current heading, it might turn left.

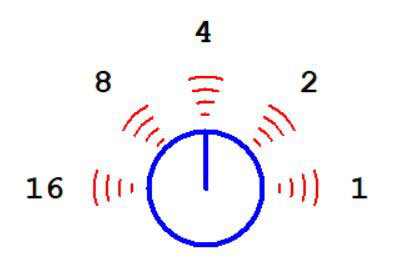

Look at the simulated robot in Figure 1.

Figure 1.

It’s part of the robot simulator featured in RobotBASIC, a free language available at www.RobotBASIC.org. The simulated robot has five perimeter sensors that can detect objects around the front half of the robot. The numbers shown are the values returned when each of the sensors detects an object. They were chosen because they are the values of the bit positions in a five-bit binary number, so every combination of triggered sensors produces a unique sum. For instance, if only sensors 2 and 4 detect objects, the sum, 6, would be returned as the sensory reading.
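To make the encoding concrete, here is a minimal Python sketch (Python rather than RobotBASIC, so it runs without the simulator) that builds a reading from the active sensors and decodes it again. It assumes only the 1, 2, 4, 8, and 16 values shown in Figure 1; the function names are hypothetical.

```python
from typing import List

# Value each perimeter sensor reports when it detects an object (Figure 1).
SENSOR_VALUES = (1, 2, 4, 8, 16)


def combined_reading(active: List[int]) -> int:
    """Sum the values of the active sensors, as the simulator reports them."""
    return sum(active)


def triggered_sensors(reading: int) -> List[int]:
    """Recover which sensors are active by testing each bit of the reading."""
    return [value for value in SENSOR_VALUES if reading & value]


if __name__ == "__main__":
    reading = combined_reading([2, 4])
    print(reading)                     # -> 6
    print(f"{reading:05b}")            # -> 00110
    print(triggered_sensors(reading))  # -> [2, 4]
```

Because each sensor owns its own bit, decoding is just a bitwise AND against each value.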

A Simple Coding Solution

Let’s look at a specific example to see how programming statements can control a robot’s behavior. If sensor 2 is active, it means an object has been detected that is ahead and right of the robot’s heading.

A reasonable response for this condition would be for the robot to turn left to avoid the object. We could make all this happen using the single RobotBASIC statement:

```
if rFeel()=2 then rTurn -10
```

The rFeel() function returns the robot’s perimeter sensor readings and if its value is 2, the statement tells the robot to turn 10 degrees to the left using the rTurn command. Of course, we would need multiple if statements to detect all the potential collision situations and create appropriate movements for each situation to avoid the objects.
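Taken together, those if statements amount to a lookup table the programmer fills in ahead of time. The Python sketch below shows that idea. Only the entry for a reading of 2 comes from the text; the entries for 4 and 8 are assumptions (4 is assumed to be the sensor dead ahead and 8 the one ahead and left), and, as with rTurn, negative angles mean left turns.

```python
from typing import Dict, Optional

# Hypothetical programmer-written rules: sensor reading -> turn in degrees.
# As with rTurn, a negative angle turns left and a positive angle turns right.
RULES: Dict[int, int] = {
    2: -10,  # object ahead and right (the article's example): turn left
    8: 10,   # ASSUMED: object ahead and left: turn right
    4: -10,  # ASSUMED: object dead ahead: turn left by arbitrary choice
}


def react(reading: int) -> Optional[int]:
    """Return the programmed turn for a reading, or None if no rule covers it."""
    return RULES.get(reading)


if __name__ == "__main__":
    for reading in (2, 8, 6):
        print(reading, react(reading))
    # -> 2 -10
    # -> 8 10
    # -> 6 None
```

Any reading without an entry (6, for example) produces no reaction at all; a fixed rule set only covers the patterns the programmer thought of.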

The robot could improve its ability to appear intelligent if it had even more sensory capability. For example, if the robot can measure the distance to objects that it detects, it could use that information to determine how sharply to turn instead of using a fixed turn angle of -10 degrees.

Such a system could certainly make the robot appear to be somewhat intelligent, but all the machine’s capabilities should be attributed to the programmer, not the robot itself. It’s up to the programmer to determine which sensor patterns might require an action, as well as what actions should be taken when those conditions are detected.
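Returning to the distance idea, one simple way to use a range reading is to scale the turn linearly, so a nearby obstacle produces a sharp turn and a distant one a gentle correction. The Python sketch below assumes a hypothetical sensor range of 100 units and turn limits of 5˚ and 45˚; none of these numbers come from RobotBASIC.

```python
def turn_angle(distance: float, max_range: float = 100.0,
               min_turn: float = 5.0, max_turn: float = 45.0) -> float:
    """Map obstacle distance to a turn size: the closer, the sharper.

    max_range, min_turn, and max_turn are hypothetical tuning values.
    """
    # Clamp so readings beyond the range (or bad negative values) stay sane.
    d = max(0.0, min(distance, max_range))
    closeness = 1.0 - d / max_range  # 1.0 when touching, 0.0 at max range
    return min_turn + closeness * (max_turn - min_turn)


if __name__ == "__main__":
    for distance in (0, 50, 100, 250):
        print(distance, turn_angle(distance))
    # -> 0 45.0
    # -> 50 25.0
    # -> 100 5.0
    # -> 250 5.0
```

A linear mapping is only one choice. Either way, the programmer is still the one deciding how distance should affect the turn.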

A Changing Environment

Under controlled conditions (a specific unchanging environment, for example), the above approach can be very effective. However, if the environment changes over time (imagine a potted plant that is moved or grows to a larger size), then the robot could encounter situations that were not anticipated by the programmer. In such a situation, the robot might react improperly or perhaps not at all.

The more sensory capabilities the robot has and the more complex its environment, the more difficult it becomes for a human programmer to anticipate all the relevant sensory patterns as well as the actions that should be invoked.

If we want a robot that can handle situations that have not been anticipated, we need to give the robot the ability to learn how to deal with new problems on its own. Such a machine could still have its limitations.

Its sensors, for example, might not detect small objects and the robot could have maneuverability issues.

If the robot can learn on its own, however, it will at least have the possibility of dealing with situations that were never anticipated by the programmer.

The Ability to Learn

One simple way to implement a learning capability would be to have the robot react to new sensory conditions by trying a random action. When the action is complete, the robot needs a way to determine if its situation has improved or not. If it has improved, then the sensory condition and the action that has been shown to improve that situation should be stored in the robot’s memory so that if that sensory condition occurs again in the future, the robot will know how to respond.

Over time, the robot should eventually find reasonable responses to all the sensory conditions it encounters. In my experience, when I study the robot’s memory afterward, I sometimes find it has learned to handle situations I had not anticipated.

This idea is primitive for sure and there are many ways we might improve it, but for now let’s see how we can implement these simple principles in a RobotBASIC program that will allow the simulated robot in Figure 1 to learn how to avoid obstacles on its own. Let’s start by establishing what we expect our program to do.

Defining Our Programming Goals

First, the goal of the simulated robot will be to explore a limited environment that contains various obstacles that might obstruct its movements. Ideally, the environment will be somewhat random so we can test the robot under different conditions. To make this project a little more exciting, we can place some food in the environment so that the robot has a reason to explore.

If none of the robot’s perimeter sensors are triggered, the robot should simply move forward slightly and look for food. If the robot sees food directly in front of it, the robot should move toward it and eat the food unless it encounters an obstacle blocking its path. If no food is seen directly ahead, it should randomly look a little to the left or right.

Anytime the robot is moving forward, it should monitor its sensors. When some pattern occurs (an obstacle blocking the path), the robot should check its memory to see if an approved action has been associated with that pattern. If one exists, the robot should perform that action.

If there is no action stored in memory for that situation, we want the robot to learn how it should handle that situation (the sensory pattern indicating an object has been detected).

A Learning Algorithm

If there’s nothing stored for a current situation, then the robot should randomly choose an action to try. If that action results in a new sensory pattern that is an improvement over the previous one, then the action that was tried should be stored in memory to be used if the original sensory situation occurs in the future.

A critical question is how the robot knows whether the random movement improved its situation. Obviously, the better the robot can judge this, the more effective the learning will be. It’s important, though, that the decision criterion not be overly complex; ideally, it should be as simple as possible. For this project, I chose to consider only how many perimeter sensors are triggered. Let me explain.

When the robot encounters an obstacle, it should make note of how many sensors are triggered. After making a random turn, if the number of sensors currently triggered is less than before the turn, then the robot will assume the new situation is less blocked than before the move.
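The improvement test itself is tiny. This Python sketch counts the set bits in a sensor pattern and compares the counts before and after a move. The names are illustrative, not taken from the article downloads.

```python
def count_triggered(pattern: int) -> int:
    """Number of sensors triggered in a pattern (one set bit per sensor)."""
    return bin(pattern).count("1")


def improved(before: int, after: int) -> bool:
    """Fewer triggered sensors after a move means the robot is less blocked."""
    return count_triggered(after) < count_triggered(before)


if __name__ == "__main__":
    print(improved(0b011, 0b001))  # -> True  (two sensors down to one)
    print(improved(0b001, 0b100))  # -> False (obstacle just moved)
    print(improved(0b001, 0b011))  # -> False (situation got worse)
```

A move that merely shifts the obstacle from one sensor to another leaves the count unchanged, so it is not treated as an improvement and nothing is learned from it.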

The important point is that the programmer is not deciding what the robot should do in any particular situation. The robot is only given a vague definition of an improved condition, one that it can easily evaluate on its own. The open question is whether this criterion is sufficient to make the robot learn.

Writing the Program

To keep things simple, we’ll only use the three middle sensors on the simulated robot (refer again to Figure 1). We can isolate those sensors by shifting the five-bit pattern to the right one position and masking off the MSB. Using only three sensors makes sense since the side sensors don’t detect objects that are directly in the robot’s path. As for actions the robot can take (other than moving forward), there are really only two: turn left or turn right.
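The shift-and-mask step is easy to check by hand. This Python sketch applies the same expression the program uses, (rFeel()>>1)&7, to a few five-bit readings and prints them in binary; the function name is hypothetical.

```python
def front_three(reading: int) -> int:
    """Keep only the three middle sensors of a five-bit reading.

    Shifting right by one drops the lowest sensor (value 1); masking with
    7 (binary 111) drops what was the highest sensor (value 16).
    """
    return (reading >> 1) & 0b111


if __name__ == "__main__":
    for reading in (0b00001, 0b00010, 0b00110, 0b10000, 0b11111):
        print(f"{reading:05b} -> {front_three(reading):03b}")
    # -> 00001 -> 000
    # -> 00010 -> 001
    # -> 00110 -> 011
    # -> 10000 -> 000
    # -> 11111 -> 111
```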

Figure 2 shows the main part of the program.

```
main:
  NumEnvironments = 5
  gosub Initialization
  for E = 1 to NumEnvironments
    gosub Environment
    gosub Search
    delay 2000
  next
  xyString 330,300,"ALL DONE"
end
```

Figure 2.

Normally, the program will stop when all the food has been eaten. To allow more time and a variety of environments for learning, the program allows you to specify how many different environments to use (each one is randomly different).

The subroutines for Initialization and drawing the Environment are not shown because of space limitations but are in the downloads for this article.

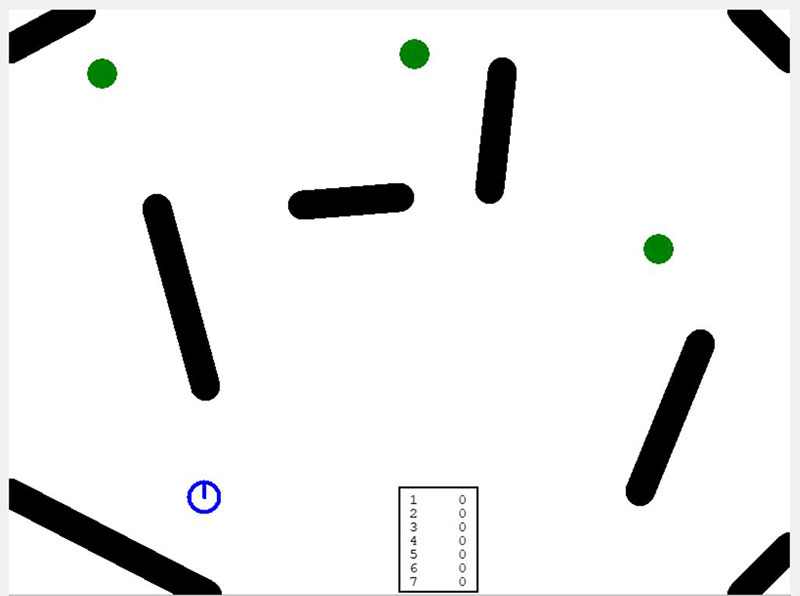

Figure 3 shows one of the environments.

Figure 3.

It’s composed of obstacles of random lengths and orientations. Notice also there are three green food plots. At the bottom of the screen you can see the values stored in the robot’s memory.

Each time all the food has been eaten, a new environment will be created so the robot can continue to learn how to navigate as it searches for food. The value of the variable NumEnvironments controls how many environments to use. The routine Search does all the hard work.

It’s shown in Figure 4 and is well commented to make it easier to analyze.

```
Search:
  //stepping on
  while FoodCnt // search till food is gone
    Senses = (rFeel()>>1)&7 // use only front 3 sensors
    if Senses=0 // no obstacles, move forward looking for food
      RepeatCount = 0 // to prevent getting stuck
      rForward Dist
      if rLook(0)=Green // look straight ahead for food
        // food seen, so eat it if it is close
        if rBeacon(Green)<30 // eat food
          x = rGPSx() // erase the food eaten
          if x<200
            call DrawCircle(x1,y1,white)
            FoodCnt--
          elseif x<550 and x>230
            call DrawCircle(x2,y2,white)
            FoodCnt--
          else
            call DrawCircle(x3,y3,white)
            FoodCnt--
          endif
        endif
      else // food is not seen so look around
        if random(2) // turn to one side or the other
          rTurn 2+random(10)
        else
          rTurn -(2+random(10))
        endif
      endif
    else // obstacles have been detected
      // check to see if current situation is in memory
      if Mem[Senses]
        // some action has been saved
        // do the saved action (either left or right)
        if Mem[Senses] = TurnLeft then rTurn -Deg
        if Mem[Senses] = TurnRight then rTurn Deg
        // see if this action keeps repeating; if so, do an about-face
        RepeatCount++
        if RepeatCount=10 then rTurn (150+random(60)) // boredom
      else // no memory for this situation
        // try to find an action that will reduce the obstruction
        // and if found, save it in memory
        Cnt=0
        call CountSensors(Senses,Cnt) // count the triggered sensors
        OldSenses = Senses // remember the offending sensors
        r=random(2)+1 // pick a random action (1 or 2)
        // and perform that action
        if r=TurnLeft then rTurn -45
        if r=TurnRight then rTurn 45
        Senses = (rFeel()>>1)&7 // new sensor reading
        NewCnt=0
        call CountSensors(Senses,NewCnt) // count triggered sensors
        // if action r reduced blockage then save action
        if NewCnt<Cnt then Mem[OldSenses]=r\gosub DisplayMem
      endif
    endif
  wend
return
```

Figure 4.

Most of the code in Figure 4 is inside a loop so it’s executed over and over until all the food is eaten. If there are no obstacles in the robot’s path, the robot moves forward and looks directly ahead for food. If food is found, the robot will eat it if it’s within reach. If no food is seen, the robot will look around by turning slightly left or right.

If obstacles are blocking the robot’s path, the memory is checked to see whether a learned action has been saved for the current sensor pattern. The only options are turn left or turn right, and if one is stored, the robot executes it using a predetermined angle, Deg (10˚ is the default value set in the Initialization subroutine).

In some environments, the robot can get stuck in a loop as it oscillates between left and right turns. To prevent this, the robot counts the number of times saved turns are executed. When that number reaches 10, the robot turns around by roughly 180˚ (150˚ plus a random amount of up to 59˚). Note: The count is automatically reset whenever the robot moves without detecting an obstacle. You can think of this action as a built-in, almost genetic, reaction to boredom.
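The repeat counter can be pictured as a small piece of state. The Python sketch below is a hypothetical helper that mirrors RepeatCount: it counts saved turns, clears the count when the path is clear, and reports an about-face angle of 150˚ plus a random 0 to 59˚ when the limit is reached. Unlike Figure 4, it also resets the count after the about-face, an assumption that lets the escape happen again if the robot gets stuck a second time.

```python
import random
from typing import Optional


class BoredomMonitor:
    """Count saved turns executed without a clear path (hypothetical helper)."""

    def __init__(self, limit: int = 10, rng: Optional[random.Random] = None):
        self.limit = limit
        self.count = 0
        self.rng = rng if rng is not None else random.Random()

    def path_clear(self) -> None:
        """Call when no sensor is triggered, like RepeatCount = 0."""
        self.count = 0

    def saved_turn_done(self) -> Optional[int]:
        """Record one saved turn; return an about-face angle at the limit."""
        self.count += 1
        if self.count >= self.limit:
            self.count = 0  # assumption: allow another about-face later
            # Same range as rTurn (150+random(60)): 150 to 209 degrees.
            return 150 + self.rng.randrange(60)
        return None


if __name__ == "__main__":
    monitor = BoredomMonitor(rng=random.Random(42))
    for step in range(1, 11):
        angle = monitor.saved_turn_done()
        if angle is not None:
            print(f"step {step}: about-face of {angle} degrees")
```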

If no action is stored in memory for the current situation, then the robot will randomly turn right or left and then check to see if its situation has improved. In this case, the turns will be 45˚ because the perimeter sensors are 45˚ apart and we want to ensure the turn will cause a change in the sensor reading.

Both before and after the random turn, the robot uses the CountSensors subroutine to count how many perimeter sensors are triggered. If the turn results in fewer sensors being triggered, the chosen action is stored in memory.
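Here is that learning trial restated as a Python sketch. The turn and read_senses callbacks are hypothetical stand-ins for rTurn and (rFeel()>>1)&7, and the action codes 1 and 2 for left and right are assumed to match the r=random(2)+1 line in Figure 4. The toy world in the demo is purely illustrative.

```python
import random
from typing import Callable, List

TURN_LEFT, TURN_RIGHT = 1, 2  # assumed codes, matching r=random(2)+1
TRIAL_ANGLE = 45              # the perimeter sensors are 45 degrees apart


def count_triggered(pattern: int) -> int:
    return bin(pattern).count("1")


def learn_step(mem: List[int], senses: int,
               turn: Callable[[int], None],
               read_senses: Callable[[], int],
               rng: random.Random) -> int:
    """Try one random 45-degree turn and store it in mem if it helped.

    Returns the sensor pattern read after the turn.
    """
    before = count_triggered(senses)
    action = rng.choice((TURN_LEFT, TURN_RIGHT))
    turn(-TRIAL_ANGLE if action == TURN_LEFT else TRIAL_ANGLE)  # negative = left
    new_senses = read_senses()
    if count_triggered(new_senses) < before:
        mem[senses] = action  # the pattern itself is the memory index
    return new_senses


if __name__ == "__main__":
    # Toy world: an obstacle seen only by the right-front sensor (001)
    # clears with a left turn and gets worse with a right turn.
    state = {"heading": 0}

    def turn(degrees: int) -> None:
        state["heading"] += degrees

    def read_senses() -> int:
        if state["heading"] < 0:
            return 0b000  # turned left: path clear
        if state["heading"] > 0:
            return 0b011  # turned right: more sensors blocked
        return 0b001

    mem = [0] * 8
    rng = random.Random()
    while mem[0b001] == 0:      # keep trying until something is learned
        state["heading"] = 0    # put the robot back for another trial
        learn_step(mem, 0b001, turn, read_senses, rng)
    print(mem[0b001])           # -> 1 (TURN_LEFT)
```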

Normally in a program like this, I might choose to make the memory a two-dimensional array so that each location could hold the sensory condition and the recommended action. In this case though, with only three sensors, there are only eight possible sensor patterns.

For that reason, I made the robot’s memory a one-dimensional array, Mem[], that holds only the recommended actions. The array positions themselves are indexed by the associated sensor pattern.
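The difference between the two layouts shows up in the lookup. In this Python sketch, a list of (pattern, action) pairs has to be searched, while the one-dimensional array simply uses the pattern as its index. The action codes, and which patterns map to which turns, are illustrative assumptions.

```python
from typing import List, Tuple

NO_ACTION, TURN_LEFT, TURN_RIGHT = 0, 1, 2  # assumed action codes

# Two-dimensional style: each entry holds a sensor pattern and its action,
# so a lookup has to search the list.
pair_memory: List[Tuple[int, int]] = [(0b001, TURN_LEFT), (0b100, TURN_RIGHT)]

# One-dimensional style, as in the article: the pattern is the index.
# Three sensors give 2**3 = 8 possible patterns, so 8 slots cover them all.
mem: List[int] = [NO_ACTION] * 8
mem[0b001] = TURN_LEFT
mem[0b100] = TURN_RIGHT


def lookup_pairs(pattern: int) -> int:
    """Search the (pattern, action) list; NO_ACTION if nothing matches."""
    for stored, action in pair_memory:
        if stored == pattern:
            return action
    return NO_ACTION


def lookup_indexed(pattern: int) -> int:
    """Direct index into the one-dimensional memory."""
    return mem[pattern]
```

With only eight slots, the indexed version wastes almost no space and never has to search; the paired layout pays off only when there are too many possible patterns to give each one a slot.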

When the program is run, the robot usually learns the proper responses within two environments (objects on the right cause left turns and objects on the left cause right turns). When symmetrical sensor patterns such as 2 (binary 010) or 5 (binary 101) occur, the robot appears to remember the first thing it tries, which is not wrong, since neither turn is better than the other in those situations.
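Which patterns count as symmetrical is easy to check: mirror each three-bit pattern left to right and see whether it comes back unchanged. This small Python sketch does that. Besides 2 and 5, it also finds 7 (all three sensors blocked) and 0, which never reaches the learning code because it means the path is clear.

```python
def mirror(pattern: int) -> int:
    """Swap the outer bits of a three-bit pattern (left and right sensors)."""
    left = (pattern >> 2) & 1
    middle = pattern & 0b010
    right = pattern & 1
    return (right << 2) | middle | left


symmetric = [p for p in range(8) if mirror(p) == p]
print(symmetric)  # -> [0, 2, 5, 7]
```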

Challenges

You can get the full program from the article downloads, but to run it, you’ll need a copy of RobotBASIC. As noted earlier, a free download is available at www.RobotBASIC.org.

Try modifying the program on your own to see if you can improve on its capabilities. You might, for example, have the robot check each time it makes a saved action to see if it still results in an improved situation, and if not, erase that memory so it can be relearned.

This kind of action would be vital if new environments have unique situations that might alter how the robot should handle various conditions.
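One possible shape for that check is sketched below in Python. It is a hypothetical extension, not part of the downloaded program. Because a saved turn is only 10˚, a single turn may not change the reading at all, so this version forgets a stored action only after it fails to help three times in a row (an arbitrary threshold).

```python
from typing import List

NO_ACTION = 0  # assumed: 0 means "nothing stored", so Mem[Senses] is false


def count_triggered(pattern: int) -> int:
    return bin(pattern).count("1")


def revalidate(mem: List[int], strikes: List[int], senses: int, after: int,
               max_strikes: int = 3) -> None:
    """Forget a stored action once it fails to help max_strikes times in a row.

    senses is the pattern that triggered the saved action; after is the
    pattern read once that action finished.
    """
    if count_triggered(after) < count_triggered(senses):
        strikes[senses] = 0      # it still helps: clear earlier misses
        return
    strikes[senses] += 1
    if strikes[senses] >= max_strikes:
        mem[senses] = NO_ACTION  # erased, so the learning branch runs again
        strikes[senses] = 0


if __name__ == "__main__":
    mem = [NO_ACTION, 1, 0, 0, 0, 0, 0, 0]  # pattern 001 -> turn left
    strikes = [0] * 8
    for _ in range(3):
        revalidate(mem, strikes, senses=0b001, after=0b001)  # no improvement
    print(mem[0b001])  # -> 0
```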

The RobotBASIC simulated robot has many different types of sensors, so if this project excites you, try giving the robot more sensory capabilities and see if you can make it become even smarter.

Next Time

Now that you have seen some conventional ideas about how a rudimentary level of intelligence can be programmed into a robot, we need to look at the approach used by modern chatbots such as ChatGPT.

Next time, we’ll get a better understanding of how they work and how their capabilities might change hobby robotics as we know it. SV

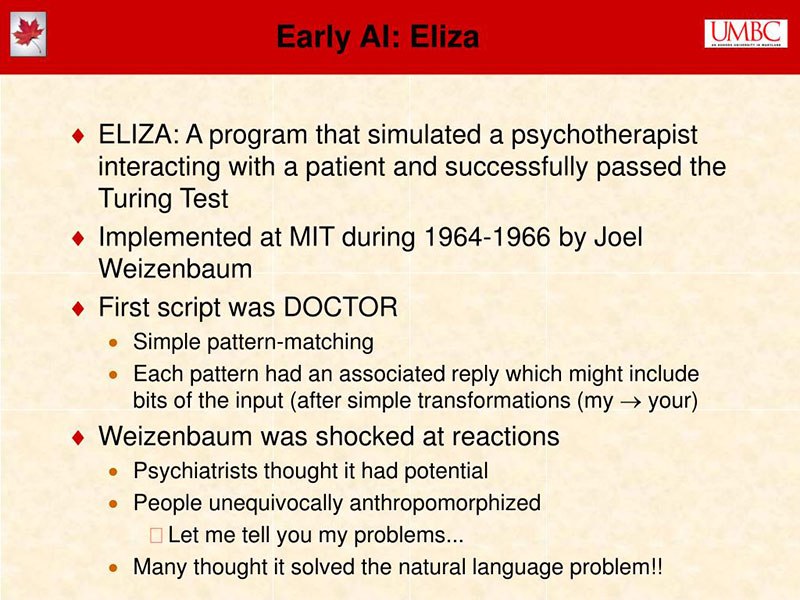

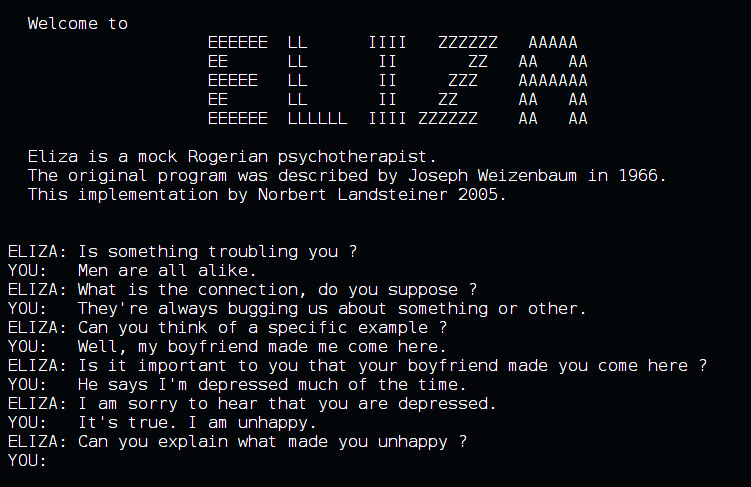

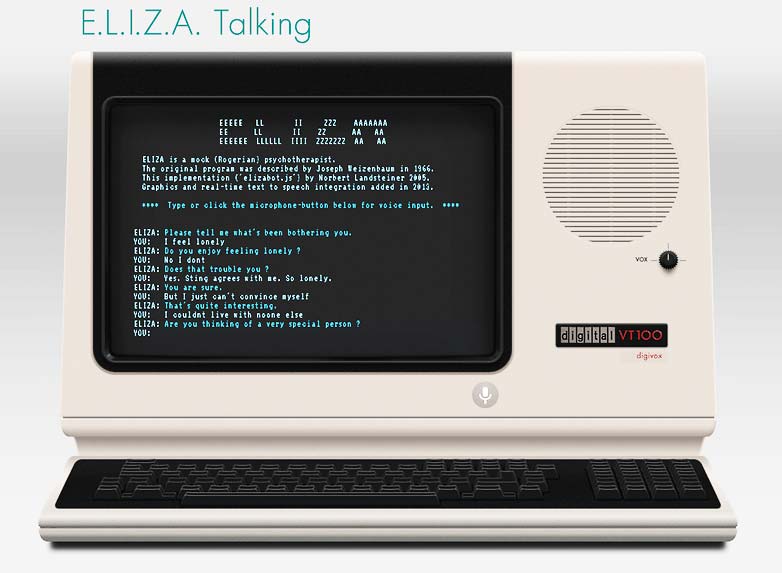

A Conversation with Eliza

ELIZA is an early natural language processing computer program developed from 1964 to 1967 at MIT by Joseph Weizenbaum. Created to explore communication between humans and machines, ELIZA simulated conversation by using a pattern matching and substitution methodology that gave users an illusion of understanding on the part of the program, even though no real understanding was taking place.

The ELIZA program itself was originally written in MAD-SLIP (SLIP stands for Symmetric LIst Processor). The pattern-matching directives that contained most of its language capability were provided in separate “scripts,” written in a Lisp-like representation.

The most famous script, DOCTOR, simulated a psychotherapist of the Rogerian school (in which the therapist often reflects back the patient’s words to the patient), and used rules dictated in the script to respond to user inputs with non-directional questions. As such, ELIZA was one of the first chatterbots (now usually called “chatbots”) and one of the first programs capable of attempting the Turing test.

Weizenbaum intended ELIZA to be a method to explore communication between humans and machines. He was surprised and shocked that individuals — including Weizenbaum’s secretary — attributed human-like feelings to the computer program. Many academics believed that the program would be able to positively influence the lives of many people — particularly those with psychological issues — and that it could aid doctors working on such patients’ treatment.

While ELIZA was capable of engaging in discourse, it could not converse with true understanding.

Many early users were convinced of ELIZA’s intelligence and understanding, despite Weizenbaum’s insistence to the contrary. The original ELIZA source code had been missing since its creation in the 1960s, as it was not common to publish articles that included source code at that time.

More recently, however, the MAD-SLIP source code was discovered in the MIT archives and has been published on various platforms such as https://archive.org/.

The source code is of high historical interest as it demonstrates not only the specificity of programming languages and techniques at that time, but also the beginning of software layering and abstraction as a means of achieving sophisticated software programming.

Courtesy of Wikipedia; https://www.nextpit.com/tbt-early-chatbot-eliza; https://www.slideserve.com.
